From Rider Names to Support Tickets: How Uber’s AI Keeps It Together

Uber’s new Prompt Engineering Toolkit makes it easy to create, test, and use prompts across different models

TL;DR

Situation: Uber needed a centralized system to efficiently create, manage, and deploy prompts for LLMs

Task: Develop a toolkit that streamlines the prompt engineering lifecycle, including exploration, iteration, evaluation, and productionization of prompt templates

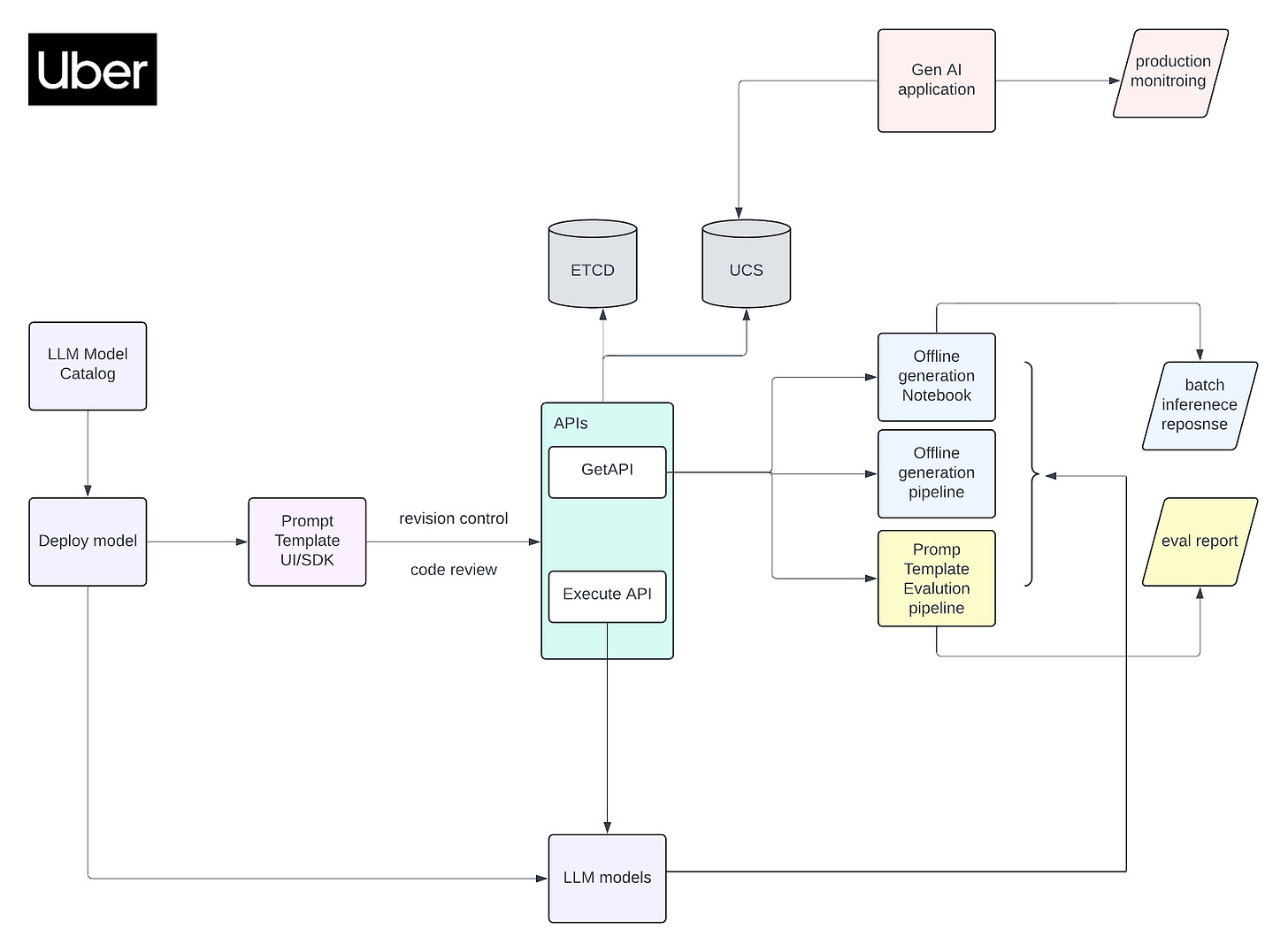

Action: Uber built the Prompt Engineering Toolkit, featuring a model catalog, GenAI Playground, auto-prompting capabilities, version control, and evaluation tools to facilitate rapid iteration and responsible AI usage

Result: The toolkit enabled faster prompt development, reuse of existing prompt templates, and enhanced monitoring of LLM performance in production

Use Cases: User Data Validation, Support Ticket Summarization

Tech Stack/Framework: LLM, LangChain, RAG

Explained Further

Key Features:

Model Catalog: A comprehensive repository detailing available LLMs, including specifications, use cases, cost estimates, and performance metrics.
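To make the idea of a catalog entry concrete, here is a minimal Python sketch of how such a record might be modeled. It is not Uber's implementation: the class name, field names, model names, prices, and scores are all hypothetical placeholders chosen for illustration, and the source does not describe the catalog's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCatalogEntry:
    """Hypothetical catalog record; the field names are assumptions."""
    name: str
    context_window_tokens: int
    use_cases: list = field(default_factory=list)
    usd_per_1k_input_tokens: float = 0.0
    usd_per_1k_output_tokens: float = 0.0
    metrics: dict = field(default_factory=dict)

    def estimate_cost_usd(self, input_tokens: int, output_tokens: int) -> float:
        """Rough cost estimate for one request, based on per-1k-token prices."""
        return (
            input_tokens / 1000 * self.usd_per_1k_input_tokens
            + output_tokens / 1000 * self.usd_per_1k_output_tokens
        )


def cheapest_for(catalog, use_case, input_tokens, output_tokens):
    """Return the lowest-cost entry that lists the use case, or None."""
    candidates = [entry for entry in catalog if use_case in entry.use_cases]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda entry: entry.estimate_cost_usd(input_tokens, output_tokens),
    )


# Placeholder entries: names, prices, and scores are invented for the example.
catalog = [
    ModelCatalogEntry(
        name="model-a",
        context_window_tokens=128_000,
        use_cases=["summarization", "validation"],
        usd_per_1k_input_tokens=0.5,
        usd_per_1k_output_tokens=1.5,
        metrics={"summarization_quality": 0.9},
    ),
    ModelCatalogEntry(
        name="model-b",
        context_window_tokens=16_000,
        use_cases=["summarization"],
        usd_per_1k_input_tokens=0.1,
        usd_per_1k_output_tokens=0.2,
        metrics={"summarization_quality": 0.8},
    ),
]

choice = cheapest_for(catalog, "summarization", input_tokens=2000, output_tokens=500)
print(choice.name if choice else "no model found")
```

Keeping cost and quality metrics on the same record is what lets a team compare models for a use case such as support ticket summarization before writing any prompt; a real catalog would likely weigh quality alongside cost rather than pick on price alone.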