How Uber Cut Data Lake Freshness From Hours to Minutes With Flink

Why Uber moved ingestion from Spark batch to Flink streaming and what it took to run thousands of jobs reliably at petabyte scale.

Fellow Data Tinkerers!

Today we will look at how Uber moved from batch to streaming in their data lake.

TL;DR

Situation

Batch-based ingestion meant data freshness was hours to days, slowing experimentation, analytics and ML across Uber’s core business domains.

Task

Move ingestion to minutes-level freshness at petabyte scale while lowering compute cost and keeping operations reliable across thousands of datasets.

Action

Built IngestionNext using Flink streaming from Kafka to Hudi, plus a control plane for operating ingestion at scale. Solved streaming bottlenecks (small files, partition skew, checkpoint vs commit alignment) to keep performance and correctness intact.

Result

Freshness improved from hours to minutes-level.

Compute usage reduced by ~25% vs batch ingestion.

Compaction performance improved by ~10x with row-group merging.

Use Cases

Near-real-time analytics, personalisation, operational analytics

Tech Stack/Framework

Apache Spark, Apache Kafka, Apache Flink, Apache Hudi, Apache Parquet

Explained further

Why data freshness became a platform priority at Uber

Uber’s data lake sits underneath a lot of the company’s analytics and machine learning. If a team wants to measure an experiment, monitor performance, train a model or sanity-check a business change, it usually starts with the question “Is the data in the lake yet?”

Historically, ingestion into the lake was batch-based. Freshness was measured in hours. That was fine when decisions moved at daily report speed. It starts to hurt when the business wants near-real-time loops: faster experiments, faster model iteration, faster detection of issues.

Over the past year, the team built and validated IngestionNext, a new ingestion system that switches the default mindset from batch to streaming. It’s centered on Apache Flink, reads events from Kafka, writes to the data lake in Apache Hudi format and operates at petabyte scale. Along the way, they had to solve the stuff that makes streaming annoying in practice: small files, partition skew, checkpoint vs commit alignment and the operational problem of running thousands of jobs reliably.

Why batch ingestion became a bottleneck

Two main reasons: freshness and efficiency.

Freshness

As the business sped up, teams across Delivery, Rider, Mobility, Finance and Marketing Analytics kept asking the same thing: “Can we get the data sooner?”

Batch ingestion creates delays measured in hours and sometimes days. That lag slows down iteration and decision-making. In a world of continuous experimentation and fast model cycles, hours of latency is basically a tax on everything.

By moving ingestion to Flink-based streaming, the team cut ingestion latency from hours to minutes. That directly supports faster model launches, quicker experiments and more accurate analytics because the lake stays closer to what’s happening now.

Efficiency

Batch ingestion with Apache Spark is heavy by nature. Jobs run on a schedule, kick off distributed work at fixed intervals and keep doing that even when the workload is uneven. At Uber’s scale, with thousands of datasets and hundreds of petabytes, that adds up to hundreds of thousands of CPU cores running daily.

Streaming smooths this out. Instead of repeatedly spinning up large batch work, resources can scale with traffic in a more continuous way. That means less scheduling overhead, less “big bang” compute and more efficient usage overall.

IngestionNext: A streaming ingestion platform built for scale

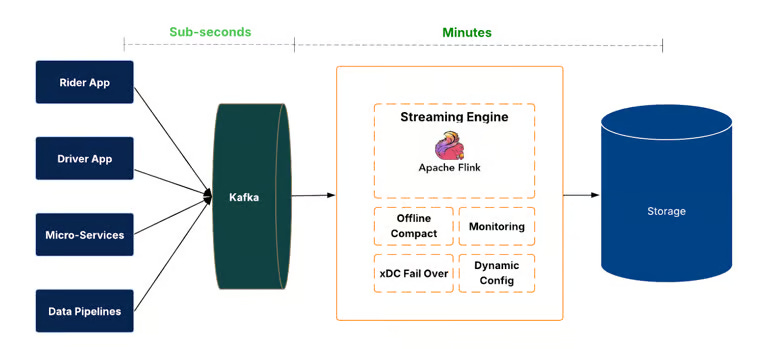

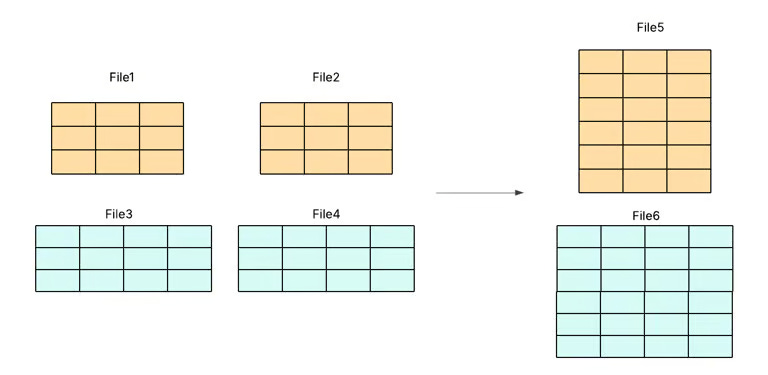

In the data plane, events land in Apache Kafka. Flink jobs consume those events and write them into the data lake using Apache Hudi. Hudi provides transactional behavior like commits, rollbacks and time travel. Freshness and completeness are measured end-to-end from source to sink, not just “did the job run.”
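As a rough illustration of what measuring freshness and completeness from source to sink could look like, here is a minimal sketch. The `WindowStats` fields and both formulas are assumptions made for this example; the source does not describe how Uber actually computes these metrics.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Counts for one time window of one dataset (all fields are illustrative)."""
    produced: int           # events the source reports for the window
    committed: int          # events visible in the lake after a commit
    newest_event_ts: float  # event time of the newest committed record (epoch seconds)


def freshness_seconds(stats: WindowStats, now_ts: float) -> float:
    """Age of the newest committed record: how far the lake lags behind 'now'."""
    return max(0.0, now_ts - stats.newest_event_ts)


def completeness(stats: WindowStats) -> float:
    """Fraction of produced events that have landed in the lake (capped at 1.0)."""
    if stats.produced == 0:
        return 1.0  # nothing was produced, so nothing is missing
    return min(1.0, stats.committed / stats.produced)


stats = WindowStats(produced=1000, committed=990, newest_event_ts=1_000.0)
print(freshness_seconds(stats, now_ts=1_180.0), completeness(stats))
```

The point of the sketch is that both numbers are derived from what actually landed in the lake, so a job that is "running" but not committing would still show up as stale or incomplete.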

Operating ingestion at this scale is not a “set it and forget it” situation. So the team built a control plane focused on automation and safety. It manages the ingestion job lifecycle (create, deploy, restart, stop, delete), handles config changes and runs health verification. The goal is simple: run thousands of ingestion jobs consistently without turning the platform into a giant manual babysitting exercise.

The system also supports regional failover and fallback strategies. If there’s an outage, ingestion can shift across regions. If needed, jobs can temporarily fall back to batch mode so ingestion stays available and data is not lost.

Solving the hard parts of streaming ingestion

Streaming buys freshness but it also introduces new failure modes. The team highlighted three major ones: small files, partition skew and checkpoint/commit synchronization.

Small files

Streaming writes data continuously. That tends to create lots of small Parquet files. Small files are a classic way to make query performance worse while also increasing metadata and storage overhead. You get fresher data, then you pay for it every time someone queries.

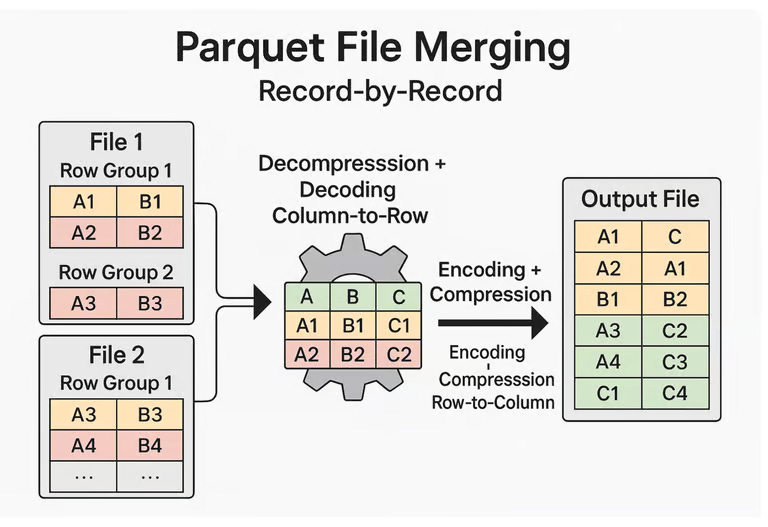

The common compaction approach merges Parquet files record by record. That means each file gets decompressed, decoded from columnar format into rows, merged, then encoded and compressed again. It works but it’s expensive and slow because you keep doing encode/decode work over and over.

To fix this, the team introduced row-group-level merging. Instead of dropping down into row format, the merge operates directly on Parquet’s native columnar structure. That avoids the expensive decode and recompression path and improves compaction performance by roughly an order of magnitude (around 10x).
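To make the difference between the two merge strategies concrete, here is a toy model in plain Python. It is not Parquet and not Uber's implementation: `ToyFile`, `RowGroup` and the zlib-compressed JSON "column chunks" are stand-ins invented for this sketch, and it assumes every input file has the same columns.

```python
import json
import zlib
from dataclasses import dataclass, field


@dataclass
class RowGroup:
    num_rows: int
    chunks: dict  # column name -> compressed bytes (stand-in for a column chunk)


@dataclass
class ToyFile:
    row_groups: list = field(default_factory=list)


def encode_row_group(columns: dict) -> RowGroup:
    """Encode and compress each column independently, as a columnar format would."""
    num_rows = len(next(iter(columns.values())))
    chunks = {name: zlib.compress(json.dumps(vals).encode())
              for name, vals in columns.items()}
    return RowGroup(num_rows, chunks)


def decode_row_group(rg: RowGroup) -> dict:
    """Decompress and decode every column chunk back into Python lists."""
    return {name: json.loads(zlib.decompress(blob))
            for name, blob in rg.chunks.items()}


def merge_record_level(files: list) -> ToyFile:
    """Baseline: decompress and decode everything, then re-encode and recompress."""
    merged: dict = {}
    for f in files:
        for rg in f.row_groups:
            for name, vals in decode_row_group(rg).items():
                merged.setdefault(name, []).extend(vals)
    return ToyFile([encode_row_group(merged)]) if merged else ToyFile()


def merge_row_group_level(files: list) -> ToyFile:
    """Row-group merge: reuse compressed chunks untouched; only the list of
    row groups (the file's index) is rebuilt."""
    return ToyFile([rg for f in files for rg in f.row_groups])


a = ToyFile([encode_row_group({"id": [1, 2], "city": ["SF", "NYC"]})])
b = ToyFile([encode_row_group({"id": [3], "city": ["LA"]})])
fast = merge_row_group_level([a, b])
# The compressed bytes are the very same objects: no decode, no recompress.
print(fast.row_groups[0].chunks["id"] is a.row_groups[0].chunks["id"])
```

In this model the record-level path touches every value twice (decode, then encode), while the row-group path only moves opaque byte blobs around, which is the intuition behind the speedup described above.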

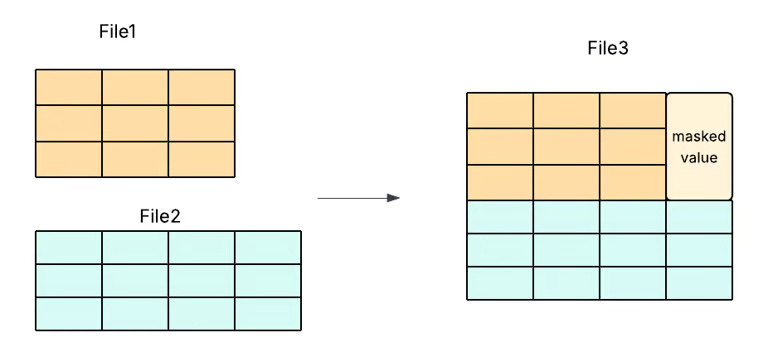

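Operating directly on row groups raises a schema question: column data that is copied as-is has to fit the schema of the merged file. There are open-source efforts exploring schema-evolution-aware merging, using padding and masking to align schemas, but that comes with added implementation complexity and maintenance risk.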

So the team took a simpler path: enforce schema consistency during merging. Only files with identical schema are merged together. No masking, no low-level code modifications, less engineering overhead and still faster, more efficient and more reliable compaction.
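A minimal sketch of the "identical schema only" rule might look like the following. The file descriptors (dicts with `path` and `schema`) and the file names are hypothetical; a real compaction planner would read schemas from file footers and also consider file sizes.

```python
from collections import defaultdict


def schema_fingerprint(schema: list) -> tuple:
    """Order-sensitive fingerprint: same column names, same types, same order."""
    return tuple((name, dtype) for name, dtype in schema)


def plan_merges(files: list) -> list:
    """Group candidate files by identical schema.

    Only groups with two or more files are returned, since a single file
    has nothing to merge with.
    """
    groups = defaultdict(list)
    for f in files:
        groups[schema_fingerprint(f["schema"])].append(f["path"])
    return [paths for paths in groups.values() if len(paths) > 1]


old_schema = [("id", "int64"), ("city", "string")]
new_schema = [("id", "int64"), ("city", "string"), ("fare", "double")]
candidates = [
    {"path": "part-0001.parquet", "schema": old_schema},
    {"path": "part-0002.parquet", "schema": old_schema},
    {"path": "part-0003.parquet", "schema": new_schema},  # schema evolved: left alone
]
print(plan_merges(candidates))
```

Files written after a schema change simply form their own group, so they get merged with each other later instead of being padded or masked to match older files.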

Partition skew

Streaming ingestion depends on steady consumption from Kafka across Flink subtasks. The messy reality is that short-lived downstream slowdowns, like garbage collection pauses, can unbalance consumption. Some partitions get read more than others. You end up with skew.

Skew doesn’t just look ugly on a dashboard. It can reduce compression efficiency and lead to slower queries downstream.

The fixes came from three angles:

Operational tuning: aligning Flink parallelism with Kafka partitions and adjusting fetch parameters.

Connector-level fairness: adding mechanisms like round-robin polling, pause/resume for heavy partitions and per-partition quotas.

Observability: exposing per-partition lag metrics, adding skew-aware autoscaling and setting targeted alerts.

This is a good reminder that streaming issues often show up first as weird lag and then become “why are queries slower now?” If you can’t see skew clearly, you’ll chase symptoms forever.
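The connector-level fairness idea (round-robin polling plus per-partition quotas) can be sketched in a few lines. This is a simplified, in-memory illustration, not the Kafka or Flink connector API: `fair_poll`, its parameters and the `buffers` structure are all made up for this example.

```python
from collections import deque


def fair_poll(buffers: dict, max_records: int, per_partition_quota: int) -> list:
    """Take up to max_records from per-partition buffers, one record per
    partition per turn, never exceeding per_partition_quota for any partition.

    buffers maps partition id -> deque of pending records; it is drained in place.
    """
    taken = {p: 0 for p in buffers}
    active = deque(sorted(buffers))  # fixed visiting order keeps results deterministic
    batch = []
    while active and len(batch) < max_records:
        p = active.popleft()
        if not buffers[p] or taken[p] >= per_partition_quota:
            continue  # empty or over quota: partition sits out the rest of this poll
        batch.append((p, buffers[p].popleft()))
        taken[p] += 1
        active.append(p)  # back of the line, so other partitions get their turn
    return batch


buffers = {
    0: deque(range(100)),   # a "heavy" partition with a large backlog
    1: deque(["a", "b"]),   # a light partition
}
print(fair_poll(buffers, max_records=6, per_partition_quota=3))
# [(0, 0), (1, 'a'), (0, 1), (1, 'b'), (0, 2)]
```

Even though partition 0 has far more pending data, it cannot crowd out partition 1 within a single poll, and the quota stops it from filling the whole batch once the light partition runs dry.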

Checkpoint and commit synchronization

Flink and Hudi each track progress but they track different things.

Flink checkpoints track consumed offsets.

Hudi commits track writes.

If failures happen and these drift out of sync, the system can skip data or duplicate it. In ingestion, either outcome is a serious problem.

The team solved this by extending Hudi commit metadata to embed Flink checkpoint IDs. With that linkage, recovery becomes deterministic during rollbacks or failovers. The system can reason about which checkpoint corresponds to which commit and recover without guessing.
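To illustrate why embedding the checkpoint ID in commit metadata makes recovery deterministic, here is a small sketch. The `Commit` fields, the state names and `plan_recovery` are assumptions for this example; they are not Hudi's timeline API or Uber's actual metadata layout.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commit:
    instant: str        # position on the table timeline (sortable id)
    state: str          # "COMPLETED" or "INFLIGHT"
    checkpoint_id: int  # Flink checkpoint id stored alongside the commit


def plan_recovery(timeline: list) -> tuple:
    """Return (checkpoint id to restore from, instants to roll back).

    The last COMPLETED commit tells us which checkpoint's data is durably in
    the lake. Restoring Flink from that checkpoint and rolling back anything
    unfinished means offsets and written data line up again.
    """
    ordered = sorted(timeline, key=lambda c: c.instant)
    last_done: Optional[Commit] = None
    for c in ordered:
        if c.state == "COMPLETED":
            last_done = c
    restore_from = last_done.checkpoint_id if last_done else None
    to_rollback = [c.instant for c in ordered if c.state != "COMPLETED"]
    return restore_from, to_rollback


timeline = [
    Commit("001", "COMPLETED", checkpoint_id=41),
    Commit("002", "COMPLETED", checkpoint_id=42),
    Commit("003", "INFLIGHT", checkpoint_id=43),  # job died mid-write
]
print(plan_recovery(timeline))  # (42, ['003'])
```

Without the stored checkpoint ID, the recovery logic would have to infer which offsets correspond to commit "002", which is exactly the guesswork that leads to skipped or duplicated data.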

Production results: faster data with lower cost

The team onboarded datasets to the Flink-based ingestion platform and validated performance on some of Uber’s largest datasets.

The early results:

Freshness: improved from hours to minutes.

Efficiency: 25% reduction in compute usage compared to batch ingestion.

Extending real-time beyond ingestion

IngestionNext improves ingestion latency from online Kafka into the offline raw data lake. That’s a big step but it’s not the full story.

Freshness still stalls downstream in transformation and analytics layers. If ingestion takes minutes but transformation is still slow, the data is still stale by the time it reaches the point of decision.

The next frontier for Uber is extending real-time capability end-to-end: ingestion → transformation → real-time insights and analytics. This matters because Uber’s lake powers a long list of domains: Delivery, Mobility, Machine Learning, Rider, Marketplace, Maps, Finance and Marketing Analytics. Freshness is a cross-cutting requirement.

Conclusion

Uber’s shift from batch to streaming ingestion is a meaningful platform milestone. By re-architecting ingestion around Apache Flink, IngestionNext delivers fresher data, stronger reliability and scalable efficiency across a petabyte-scale lake.

The design is not just “run Flink jobs.” It includes operational foundations like an automated control plane, resiliency strategies and streaming-specific engineering work: faster compaction via row-group merging, skew controls and deterministic recovery by linking Flink checkpoints to Hudi commits.

The bigger idea is the mindset shift: treating freshness as a first-class dimension of data quality. With IngestionNext proven in production, the next push is clear: bring streaming into downstream transformation and analytics so the company can close the real-time loop, not just ingest data faster.

The full scoop

To learn more about this, check out Uber's Engineering Blog post on this topic.

If you are already subscribed and enjoyed the article, please give it a like and/or share it with others. I really appreciate it 🙏

Brilliant deep dive into the streaming shift. The row-group merging trick is the kind of optimization that makes or breaks real-time at scale. I've seen teams burn compute like crazy doing row-level compaction and never realize why latency kept creeping up. Linking Flink checkpoints to Hudi commits sounds obvious in hindsight, but coordinating those failure boundaries is probably the reason most folks just stick with batch ingestion.