How Bolt Reconciles €2B in Revenue Using Airflow, Spark and dbt

A look under the hood of a multi-country finance pipeline that ingests raw data, models discrepancies and reconciles cash flows at scale.

Fellow Data Tinkerers!

Today we will look at how Bolt tracks payments at scale.

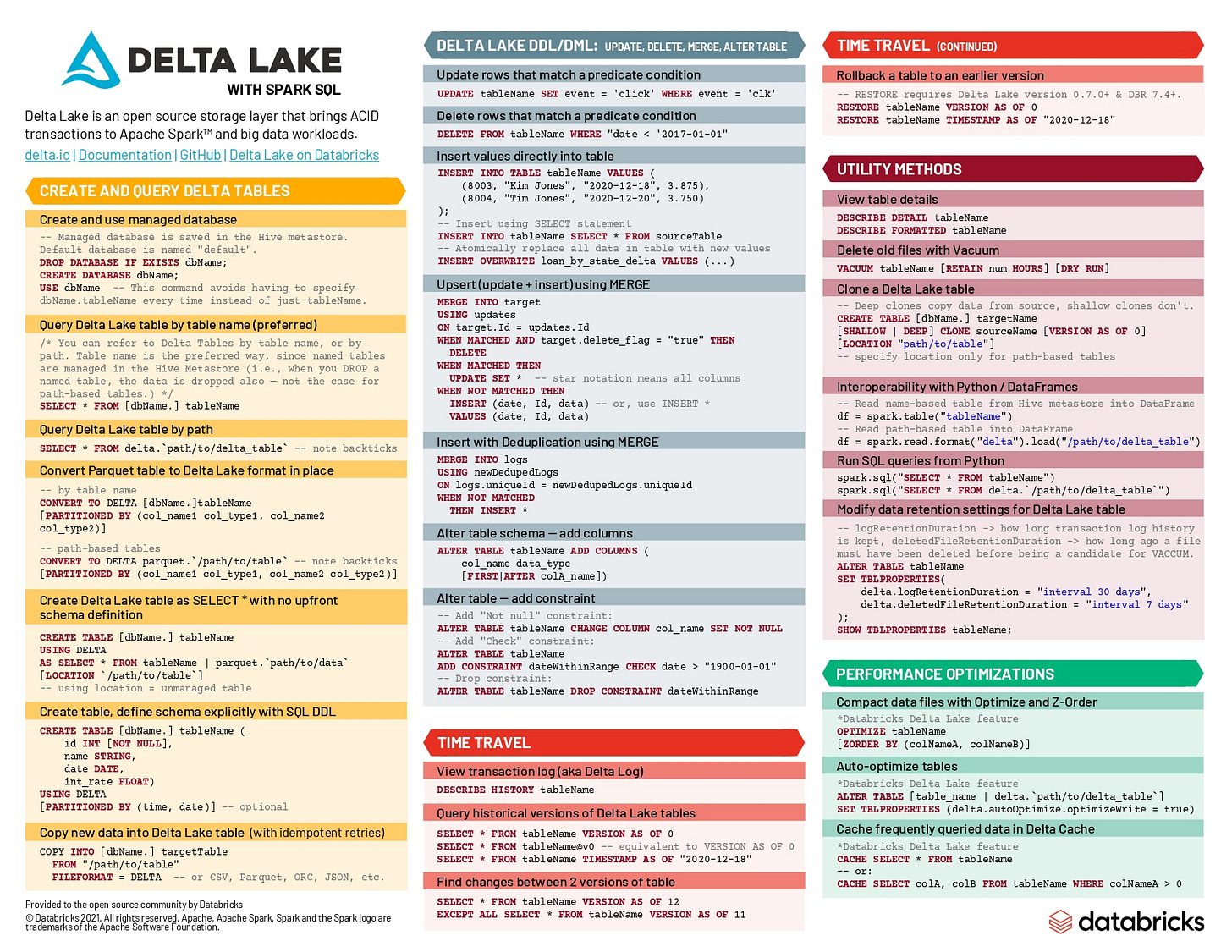

Let’s get to how Bolt deals with processing payments from millions of customers.

TL;DR

Situation

Bolt operates globally and processes millions of transactions through multiple Payment Service Providers (PSPs), each with its own fee structures, currencies, payout schedules and reporting formats. Tracking where the money goes, accurately and at scale, is a giant headache.

Task

The data engineering team needed to build a scalable, end-to-end system to ingest PSP data, reconcile it with internal records and bank deposits and ensure accurate accounting and financial transparency across all markets.

Action

They built a modular system with six key components:

Ingest raw PSP data via SFTP/API using AWS Batch + Airflow.

Store raw files in S3 untouched (no early cleanup).

Parse to Delta format for querying, forcing all columns to strings.

Clean and standardise data using dbt + Spark, handling duplicates, schema variations and terminology mapping.

Run reconciliation against internal systems and bank data, surfacing mismatches.

Monitor and account for discrepancies with dashboards, alerts and special handling for edge cases like clearing entries or rounding errors.

Result

Bolt now has a reliable, scalable payment tracking system that handles PSP complexity with minimal manual intervention, enables precise reconciliation, improves financial control and supports accurate accounting across business units and countries.

Use Cases

Financial reconciliation, self-serve financial reporting, automated monitoring

Tech Stack/Framework

Apache Airflow, AWS Batch, AWS S3, Delta Lake, AWS Glue, dbt, Apache Spark

Explained further

About Bolt

Bolt is basically Europe’s Uber. They run ride-hailing, scooter rentals, food delivery and even grocery delivery across 45+ countries. That kind of footprint means handling payments is a full-blown data engineering challenge. Every ride, meal or parcel involves a tangled mess of currencies, fees and payout schedules. Reconciling all that? Not exactly a spreadsheet job.

The payment maze

When someone takes a Bolt ride and pays through the app, the money doesn’t just appear in Bolt’s account. It takes a scenic route, hopping between issuing banks, card networks, acquiring banks and Payment Service Providers (PSPs) before finally landing in Bolt’s bank account (minus all the fees taken along the way).

And it doesn’t happen instantly either. Funds land in batches, each one accompanied by a slightly different report format, fee breakdown and settlement timeline depending on the PSP. Multiply that complexity by the number of PSPs Bolt works with globally and you’ve got a reconciliation nightmare if you’re not careful.

So Bolt built a system that keeps track of all this across currencies, time zones, business units and accounting rules. Let’s dig into what that system actually looks like.

Why does this matter?

If you don’t track money accurately, you lose control. Bolt has to reconcile PSP data to figure out:

Where’s the cash? If what hit the bank doesn’t match what you expected, it could mean fraud, bugs or just someone forgetting to upload a report.

When’s the cash coming? Liquidity forecasts fall apart if payout timing is unpredictable.

What are we paying in fees? PSP invoices aren’t always transparent; some charges need to be teased out from the fine print.

How do we allocate cost? PSPs charge fees as one bulk amount, and that lump sum needs to be split across regions and business lines to understand true profitability.

Is our data pipeline broken? Reconciliation failures can be a canary for technical issues upstream.

To solve all this, Bolt built a data platform that can scale across PSPs and give finance teams confidence in the numbers.

The solution that makes it work

Step 1: getting the data (ingestion)

Everything starts with pulling in reports from PSPs. Most providers offer a transaction reconciliation report which lists all transactions tied to a particular payout: the gross amounts, the associated fees, any adjustments and the total transferred.

Bolt uses two main ingestion modes:

SFTP drops: Bulk files (often CSV) are uploaded to SFTP servers.

APIs: REST endpoints that return transaction and settlement details.

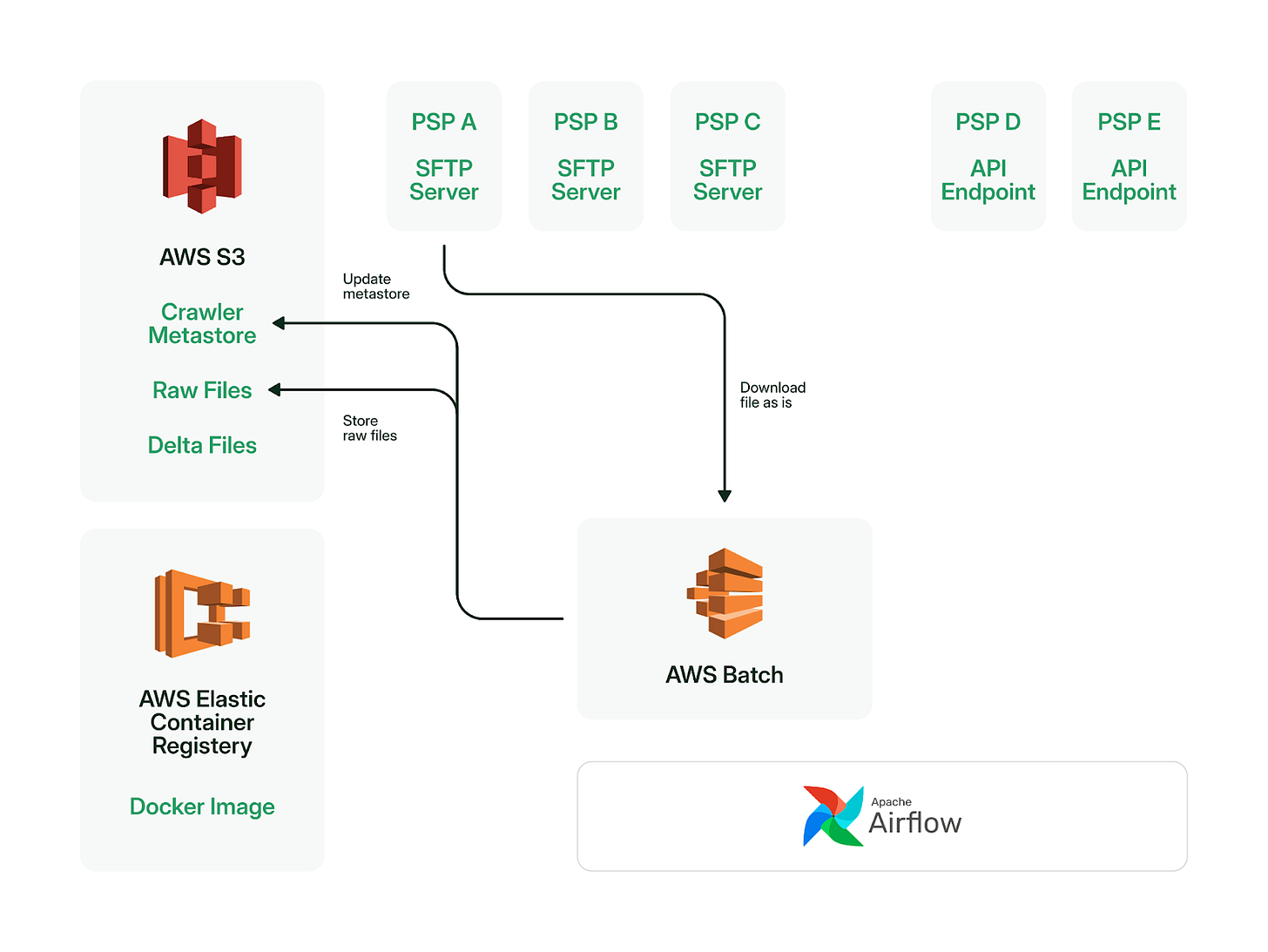

At Bolt, ingestion jobs run on AWS Batch, scheduled via Airflow. Each run spins up a Docker container built for that specific PSP integration. Raw files are saved to S3 and ingestion metadata (what’s been processed) is tracked in a crawler metastore.

SFTP integration

Here’s how it works:

The job reads from a metadata store in S3 (the crawler metastore) to check what’s already been processed.

Connects via SFTP and scans for new/modified files.

Downloads those to disk, uploads them unaltered to S3, updates the crawler metastore and wipes local storage.

This keeps things lightweight, stateless and scalable.
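To make the "check what’s already been processed" step concrete, here is a minimal sketch in Python. It assumes the crawler metastore can be modelled as a mapping from file path to the modification time and size seen at the last ingestion. The names `RemoteFile`, `select_new_files` and `mark_processed` are hypothetical, and the actual SFTP and S3 calls are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteFile:
    path: str   # path on the PSP's SFTP server
    mtime: int  # last-modified time, seconds since epoch
    size: int   # size in bytes


def select_new_files(listing, processed):
    """Return files that are new, or changed since they were last ingested.

    `processed` maps path -> (mtime, size), as recorded in the metastore.
    """
    return [f for f in listing if processed.get(f.path) != (f.mtime, f.size)]


def mark_processed(processed, files):
    """Return an updated copy of the metastore after a successful upload."""
    updated = dict(processed)
    for f in files:
        updated[f.path] = (f.mtime, f.size)
    return updated


# Hypothetical example data
processed = {"/reports/2024-01-01.csv": (1704153600, 2048)}
listing = [
    RemoteFile("/reports/2024-01-01.csv", 1704153600, 2048),  # unchanged
    RemoteFile("/reports/2024-01-02.csv", 1704240000, 4096),  # new
]
todo = select_new_files(listing, processed)
print([f.path for f in todo])  # ['/reports/2024-01-02.csv']
```

Because the job keeps no state of its own between runs, everything it needs to decide what to download lives in the metastore.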

API integration

The setup is almost identical. The only difference is that instead of listing files on an SFTP server, the job hits an API endpoint and downloads structured data.

There’s no cleaning or parsing done at this stage. Why? Because raw data is king and cleanup happens later. The team also allows duplicate ingestion as deduping is a separate concern.

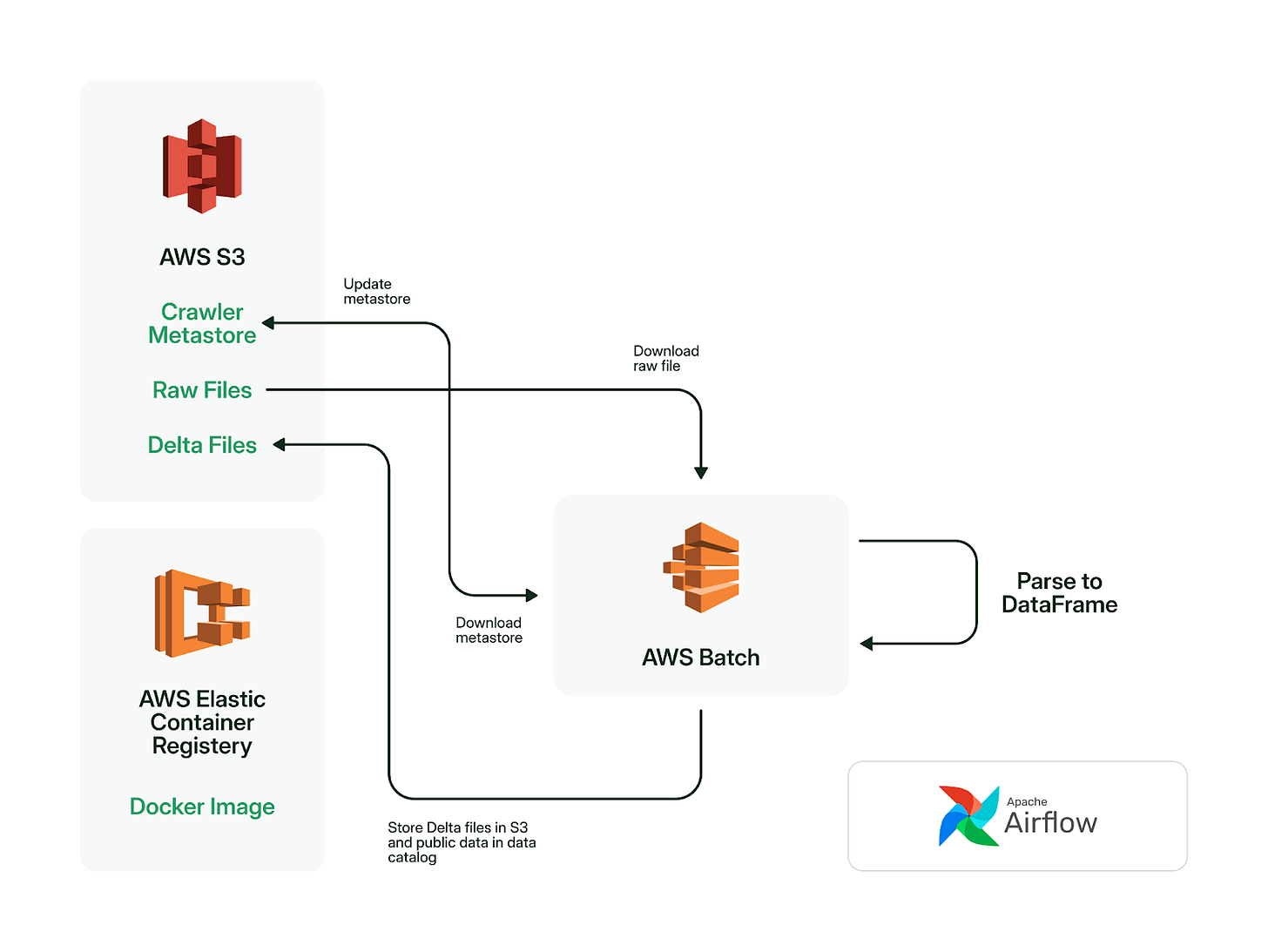

Step 2: from raw files to queryable tables

Raw files aren’t useful until they’re made queryable. That’s where Delta Lake comes in.

Bolt’s system downloads unparsed files from S3, parses them into in-memory DataFrames and writes them back to S3 in Delta format, an open table format that stores data as Parquet files and adds ACID transactions and schema evolution on top.

Once that’s done, the job registers the data with AWS Glue, making it visible to query engines.

To keep this process robust:

All columns are parsed as strings. Data types are handled later.

No transformations are done here either. This job is just about getting files into a readable, structured format.
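Here is a rough illustration of the "everything is a string" rule, using Python’s standard `csv` module in place of a DataFrame library. The column names and sample data are made up, and writing to Delta and registering with Glue are left out.

```python
import csv
import io


def parse_report(raw_text):
    """Parse a CSV report into rows where every value stays a string."""
    reader = csv.DictReader(io.StringIO(raw_text))
    return [dict(row) for row in reader]


# Hypothetical raw report content
raw = "txn_id,amount,currency\n001,10.50,EUR\n002,7.00,EUR\n"
rows = parse_report(raw)
print(rows[0])  # {'txn_id': '001', 'amount': '10.50', 'currency': 'EUR'}
```

One likely benefit of deferring types: values such as `001` or `7.00` survive exactly as the PSP sent them, and an unexpected value in a column cannot fail the parsing job.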

Step 3: making the data actually useful

3.1 data clean up

At this point, the data is accessible via SQL but it’s still messy:

Duplicate rows

Wrong column types

Inconsistent column names across PSPs

Bolt tackles these issues using dbt on Apache Spark:

Duplicates get removed.

Strings get cast into correct types.

Columns get renamed into something human-readable.

This produces a cleaned staging table.
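Bolt does this in dbt models on Spark. The plain-Python sketch below only illustrates the three operations and is not a copy of their models. The `RENAMES` mapping and all column names are hypothetical.

```python
from datetime import date
from decimal import Decimal

# Hypothetical mapping from one PSP's column names to readable ones
RENAMES = {"txn_ref": "transaction_id", "amt": "amount", "settle_dt": "settlement_date"}


def clean(rows):
    """Deduplicate, rename and type-cast rows whose values are all strings."""
    seen = set()
    cleaned = []
    for row in rows:
        key = tuple(sorted(row.items()))  # identifies exact duplicate rows
        if key in seen:
            continue
        seen.add(key)
        renamed = {RENAMES.get(k, k): v for k, v in row.items()}
        renamed["amount"] = Decimal(renamed["amount"])
        renamed["settlement_date"] = date.fromisoformat(renamed["settlement_date"])
        cleaned.append(renamed)
    return cleaned


# Hypothetical parsed rows; the first two are identical (ingested twice)
raw_rows = [
    {"txn_ref": "001", "amt": "10.50", "settle_dt": "2024-01-02"},
    {"txn_ref": "001", "amt": "10.50", "settle_dt": "2024-01-02"},
    {"txn_ref": "002", "amt": "7.00", "settle_dt": "2024-01-02"},
]
staging = clean(raw_rows)
print(len(staging))  # 2
```

Amounts are cast to `Decimal` rather than `float` here so that later sums of money do not pick up binary rounding noise.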

3.2 data standardisation

Now comes the real magic: standardising PSP reports so they all fit the same schema. Every PSP speaks its own dialect. To make downstream processing easier, Bolt standardises the data into one common language:

Denormalisation: Flatten nested report structures.

Terminology mapping: Convert “PaymentCapture” and “CaptureSettled” and all their cousins into Bolt’s internal “capture.”

Legal entity mapping: Link each merchant account to the right legal entity in Bolt’s org chart.

Granular breakdowns: Split bundled transaction rows into atomic pieces. For example, one row becomes three: one each for the gross amount, the interchange fee and the scheme fee.

Validation: Confirm that payout = gross - fees.

The goal here is to produce a canonical, clean and consistent dataset so the next steps (like reconciliation) can be fully automated, no matter which PSP is involved.
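A minimal Python sketch of three of these steps (terminology mapping, granular breakdown and validation) might look like the following. The two event names come from the example above. The field names, the `TERMS` dictionary and the convention of storing fees as negative amounts are assumptions for illustration.

```python
from decimal import Decimal

# Mapping of PSP-specific event names to the internal term
TERMS = {"PaymentCapture": "capture", "CaptureSettled": "capture"}


def standardise(row):
    """Split one bundled PSP row into atomic rows in a common vocabulary."""
    gross = Decimal(row["gross"])
    interchange = Decimal(row["interchange_fee"])
    scheme = Decimal(row["scheme_fee"])
    payout = Decimal(row["payout"])

    # Validation: payout = gross - fees
    if payout != gross - (interchange + scheme):
        raise ValueError(f"payout mismatch for {row['transaction_id']}")

    base = {"transaction_id": row["transaction_id"], "type": TERMS[row["event"]]}
    return [
        {**base, "component": "gross", "amount": gross},
        {**base, "component": "interchange_fee", "amount": -interchange},
        {**base, "component": "scheme_fee", "amount": -scheme},
    ]


# Hypothetical bundled row from a PSP report
bundled = {
    "transaction_id": "001",
    "event": "CaptureSettled",
    "gross": "10.00",
    "interchange_fee": "0.20",
    "scheme_fee": "0.05",
    "payout": "9.75",
}
atomic = standardise(bundled)
print(sum(r["amount"] for r in atomic))  # 9.75
```

With fees stored as negative amounts, the atomic rows for a transaction sum to its payout, which makes the validation rule easy to re-check downstream.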

Step 4: reconciliation process

Once everything’s in standardised format, Bolt can compare it with internal records and bank deposits. There are two main reconciliation steps:

4.1 transactional reconciliation

This step compares PSP transactions to Bolt’s internal payment records. Are we seeing the same payments on both sides? If not, something went sideways.

4.2 settlement reconciliation

This step checks that the payout amounts reported by the PSPs match what actually landed in the bank.

Both types of reconciliation are implemented in SQL models via dbt and run in Spark. Outputs are stored as separate tables, ready for reporting or alerting.
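Conceptually, both reconciliations boil down to a full outer join on a shared key, followed by a comparison of amounts. Here is a small Python sketch of that idea. The status labels and the `reconcile` function are hypothetical, and Bolt’s real logic lives in dbt SQL models.

```python
from decimal import Decimal


def reconcile(psp, internal):
    """Compare two {transaction_id: amount} mappings and classify each id."""
    result = {}
    for txn_id in sorted(psp.keys() | internal.keys()):
        if txn_id not in internal:
            result[txn_id] = "missing_internal"
        elif txn_id not in psp:
            result[txn_id] = "missing_psp"
        elif psp[txn_id] != internal[txn_id]:
            result[txn_id] = "amount_mismatch"
        else:
            result[txn_id] = "matched"
    return result


# Hypothetical example data
psp = {"t1": Decimal("10.00"), "t2": Decimal("5.00"), "t3": Decimal("7.50")}
internal = {"t1": Decimal("10.00"), "t2": Decimal("5.50"), "t4": Decimal("3.00")}
print(reconcile(psp, internal))
# {'t1': 'matched', 't2': 'amount_mismatch', 't3': 'missing_internal', 't4': 'missing_psp'}
```

The same shape would work for settlement reconciliation by keying on a payout reference and comparing PSP-reported payouts to bank deposits.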

Step 5: monitoring reconciliation quality

Reconciliation logic is only half the battle. You also need visibility into how well it’s working.

Bolt set up:

BI dashboards: Show reconciliation rates over time so ops teams can spot trends.

Automated alerts: If the reconciliation rate drops below a threshold, an alert fires and someone gets paged.

The idea is to catch issues early before they snowball into end-of-quarter fire drills.
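The alerting rule can be sketched in a few lines of Python. The 99% threshold and the status labels are invented for this example; the source does not say which values Bolt uses.

```python
def reconciliation_rate(statuses):
    """Share of records whose status is 'matched' (1.0 if there are none)."""
    if not statuses:
        return 1.0
    return sum(s == "matched" for s in statuses) / len(statuses)


def should_alert(statuses, threshold=0.99):
    """True when the rate falls below the (hypothetical) threshold."""
    return reconciliation_rate(statuses) < threshold


# Hypothetical day of results: 97 matched, 3 mismatched
statuses = ["matched"] * 97 + ["amount_mismatch"] * 3
print(reconciliation_rate(statuses))  # 0.97
print(should_alert(statuses))         # True
```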

Step 6: accounting for the “weird” stuff

Last but not least, not everything fits into the clean “payment - fee = net payout” logic. Some scenarios require special handling, including:

Non-fee adjustments: Things like one-time PSP reserves or penalties that weren’t detailed in invoices.

Reconciliation differences: Minor variances (e.g. rounding errors) that get booked as P&L entries.

Clearing entries: Movements between legal entities or currency conversions.

Fee allocation: Break down bulk PSP fees and spread them across business units or geographies.

This part ensures the books match reality and that every last cent is accounted for.
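Fee allocation is the easiest of these to show in code. The sketch below splits a bulk fee in proportion to each business unit’s volume and uses the largest-remainder method so the shares add up to the exact total. Both the allocation key (volume) and the rounding method are assumptions, not something the source confirms Bolt uses.

```python
from decimal import Decimal, ROUND_DOWN

CENT = Decimal("0.01")


def allocate_fee(total_fee, volumes):
    """Split total_fee across keys in proportion to volumes, exact to the cent."""
    total_volume = sum(volumes.values())
    exact = {k: total_fee * v / total_volume for k, v in volumes.items()}
    shares = {k: x.quantize(CENT, rounding=ROUND_DOWN) for k, x in exact.items()}
    leftover = total_fee - sum(shares.values())
    # Hand out leftover cents to the largest rounding remainders first
    by_remainder = sorted(exact, key=lambda k: exact[k] - shares[k], reverse=True)
    for k in by_remainder[: int(leftover / CENT)]:
        shares[k] += CENT
    return shares


# Hypothetical bulk fee and per-business-unit volumes
volumes = {"rides": Decimal("5000"), "food": Decimal("3000"), "scooters": Decimal("1000")}
shares = allocate_fee(Decimal("100.00"), volumes)
print(shares)
# {'rides': Decimal('55.56'), 'food': Decimal('33.33'), 'scooters': Decimal('11.11')}
```

Rounding each share independently would leave a stray cent here; distributing the leftover explicitly keeps the allocated amounts equal to the invoiced fee.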

Wrapping it up

Bolt’s payments tracking pipeline is a good example of what it takes to manage financial complexity at scale:

PSPs are inconsistent, but the system doesn’t care because it handles variation by design.

The platform separates concerns: ingest raw, parse cleanly, model consistently and reconcile smartly.

Every step is automatable, auditable and scalable.

When your business runs across countries, currencies and providers, this kind of infrastructure isn’t a luxury; it’s the price of staying solvent.

The full scoop

To learn more, check Bolt’s Engineering Blog post on this topic.
