How Flipkart Scaled Delivery Date Calculation 10x While Slashing Latency by 90%

Optimising for 100 items in 100ms without breaking the backend (or the bank)

Fellow Data Tinkerers!

Today we will look at how Flipkart solved the problem of calculating delivery dates at scale.

But before that, I wanted to share an example of what you could unlock if you share Data Tinkerer with just 2 other people.

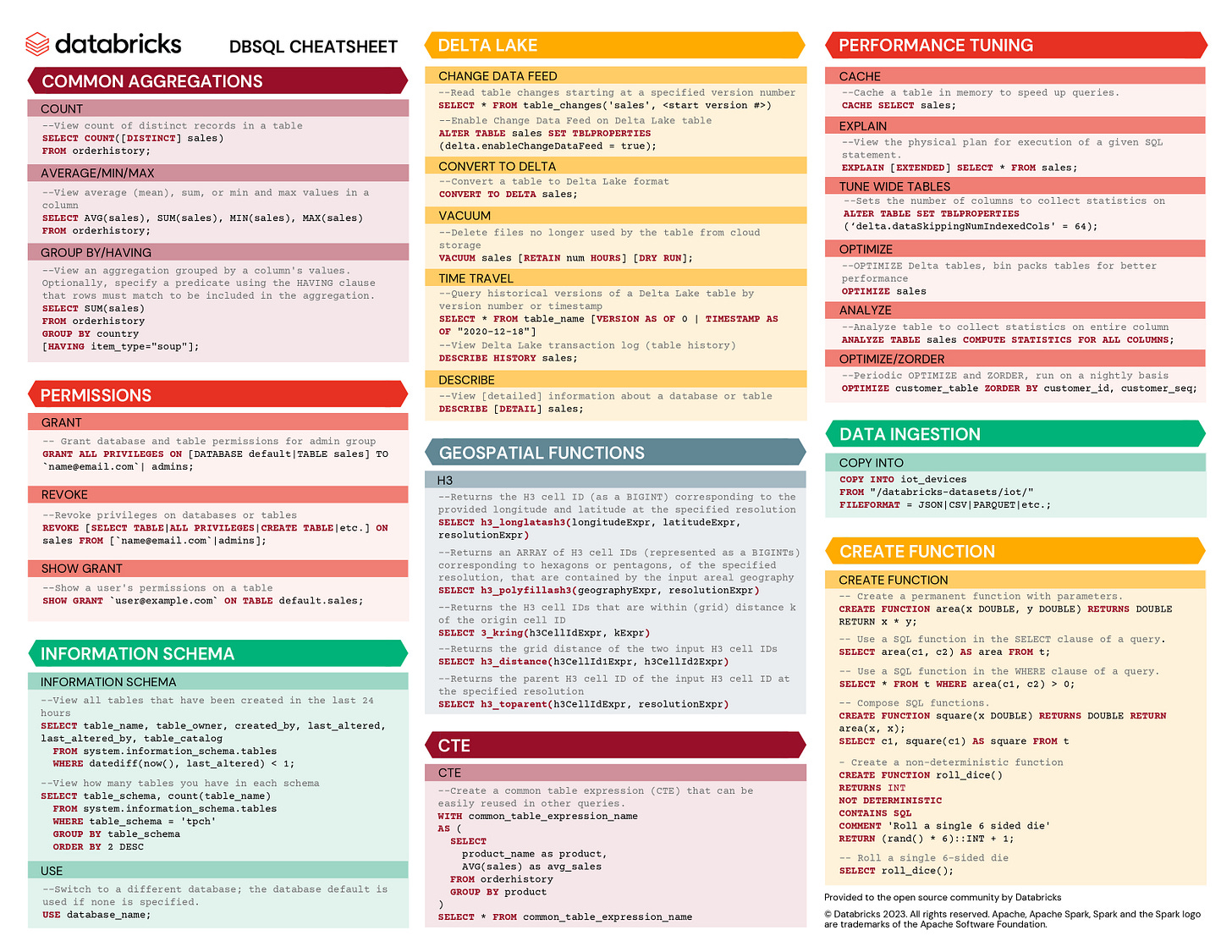

There are 100+ more cheat sheets covering everything from Python, R, SQL and Spark to Power BI, Tableau, Git and more. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Now, with that out of the way, let’s get to Flipkart’s challenge of calculating delivery dates at scale.