How Expedia Monitors 1000+ A/B Tests in Real Time with Flink and Kafka

A look inside the pipeline that spots underperforming experiments in minutes, not days

Fellow Data Tinkerers!

Today we will look at how Expedia Group monitors A/B tests at scale.

But before that, I wanted to share an example of what you could unlock if you share Data Tinkerer with just two other people and they subscribe to the newsletter.

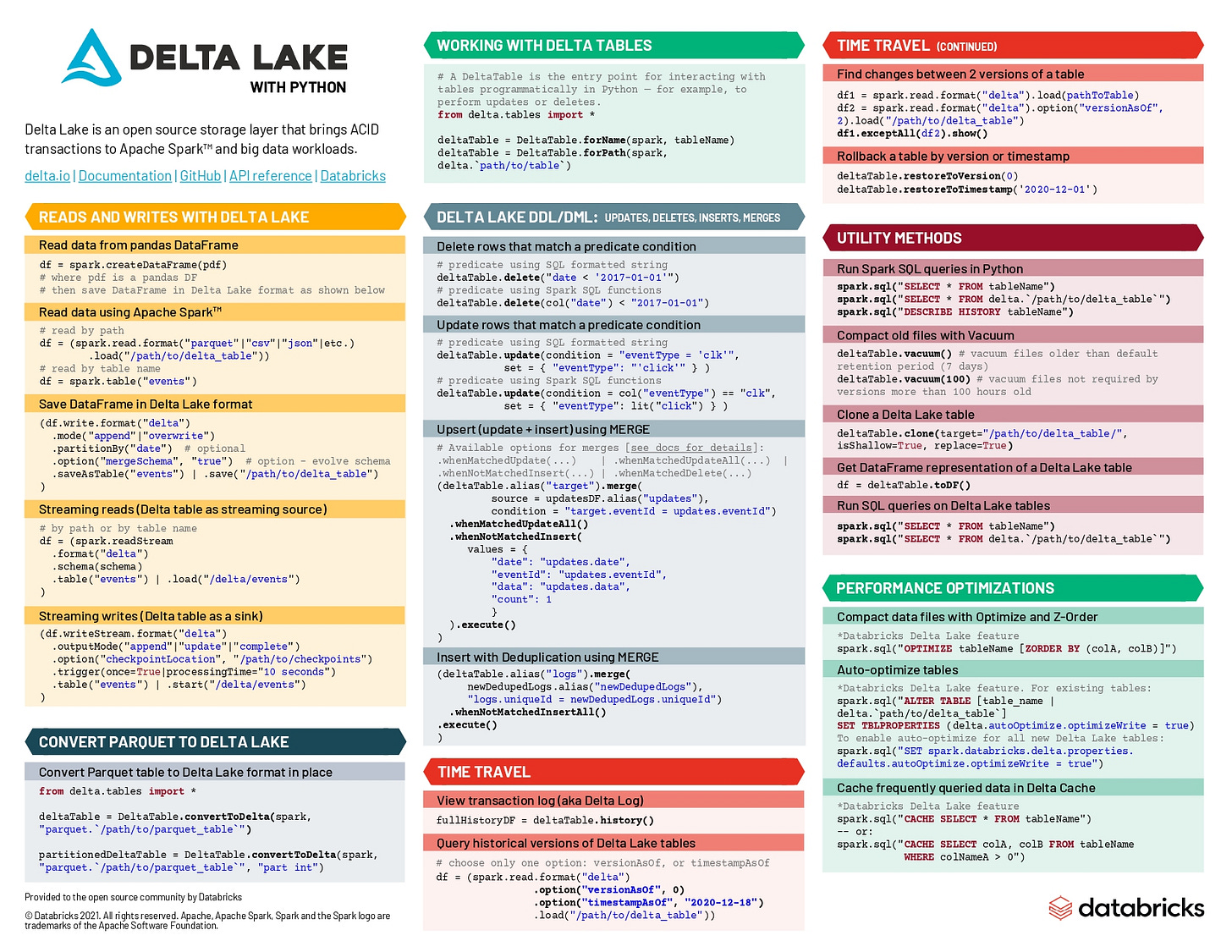

There are 100+ more cheat sheets covering everything from Python, R, SQL and Spark to Power BI, Tableau, Git and many more. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Now, with that out of the way, let’s get into how Expedia monitors A/B tests in real time.