How Netflix Used Deep Learning to Slash Video Quality Control Time by 90%

Neural networks, synthetic pixel generators and a smarter pipeline

Fellow Data Tinkerers!

Today we will look at how Netflix uses neural networks to automate the flagging of pixel errors.

But before that, I wanted to share with you what you could unlock if you share Data Tinkerer with just 1 more person.

There are 100+ resources to learn all things data (science, engineering, analysis). They include videos, courses and projects, and can be filtered by tech stack (Python, SQL, Spark, etc.), skill level (Beginner, Intermediate and so on), provider name or free/paid. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Now, with that out of the way, let’s get to how Netflix automated video quality control.

TL;DR

Situation

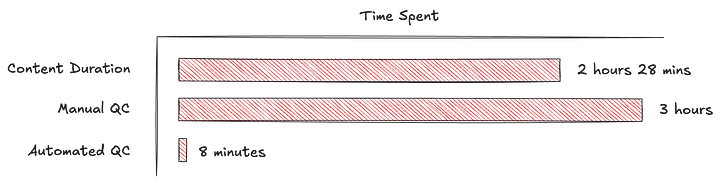

Netflix teams spent hours manually scanning frames for hot pixels: tiny sensor glitches that distract viewers and cost a lot to fix later.

Task

They needed a way to catch these defects early, accurately and at scale while freeing creative staff from tedious quality control work.

Action

The team built a full-resolution neural net with temporal context, trained first on synthetic pixel errors, then fine-tuned on real footage to cut false positives.

Result

Quality control time dropped from hours to minutes with earlier detection, lower costs and more focus on creative storytelling.

Use Cases

Early-stage defect detection, real-time monitoring, synthetic data training

Tech Stack/Framework

Neural networks, temporal windowing

Explained further

Context

At Netflix, the filmmaking process is equal parts creativity and technology. The creative teams bring stories, characters and emotions to life. The technology side tries to make sure nothing distracts from that experience, not even a stubborn bright pixel in the corner of the frame.

The Netflix team’s philosophy is simple: if a task is repetitive, tedious and requires almost no creative judgment, let the machines do it. Freeing up human brains for actual storytelling is the point.

With that in mind, Netflix engineers built a new quality control (QC) method that detects pixel-level artifacts automatically. This reduces the painful manual scanning of every frame in the early QC stages and lets creators focus on, well, creating.

Why pixel errors could not be ignored

Storytelling is fragile. An errant pixel may seem trivial, but on a 65-inch 4K display, one bright dot in the middle of a tense scene can pull a viewer right out of the narrative.

Traditionally, production teams had to watch and re-watch every shot, searching for these technical errors. The villains here are tiny bright spots caused by malfunctioning camera sensors, known in the trade as “hot” or “lit” pixels.

They are rare, random and easy to miss. Even with careful inspection, they can slip through and resurface much later in the pipeline. Fixing them at that point is costly and labor-intensive.

By automating this early QC step, Netflix’s system does three things:

Surfaces issues faster before they multiply.

Saves teams from long hours of monotonous review.

Cuts downstream costs by addressing defects early.

The payoff is simple: storytellers can focus on telling stories, not chasing pixels.

Detecting pixel flaws with surgical accuracy

Pixel errors come in two main flavors:

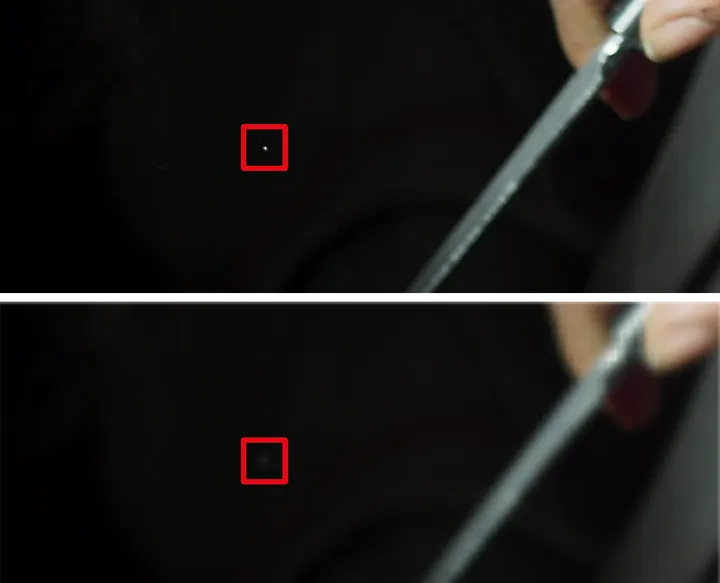

Hot (lit) pixels: isolated bright pixels that appear in a single frame.

Dead (stuck) pixels: sensor pixels that fail to respond to light consistently.

Netflix had already tackled dead pixels in earlier work, using pixel-intensity gradients and statistical comparisons. The new challenge focused squarely on hot pixels, which are harder to detect.

Why? Because they can be vanishingly small, show up briefly and mimic legitimate light sources in a scene. Think of a single reflection, a specular highlight or a twinkling star in the background. They look just like hot pixels unless you have context across frames.
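To see why cross-frame context helps, here is a deliberately simple hand-crafted baseline, not Netflix’s learned model: flag a pixel only if it is much brighter in the current frame than in the frames on either side. The function name and the `min_jump` threshold are illustrative assumptions.

```python
import numpy as np

def transient_bright_mask(frames: np.ndarray, t: int, min_jump: float = 0.5) -> np.ndarray:
    """Flag pixels in frame t that are much brighter than in the adjacent frames.

    frames: luminance clip of shape (T, H, W) with values in [0, 1].
    A pixel is flagged when it exceeds the brighter of its temporal neighbours
    (frames t-1 and t+1, where they exist) by at least `min_jump`.
    """
    neighbours = [i for i in (t - 1, t + 1) if 0 <= i < len(frames)]
    if not neighbours:
        raise ValueError("need at least two frames for temporal context")
    reference = frames[neighbours].max(axis=0)
    return (frames[t] - reference) >= min_jump

# Toy clip: a highlight that persists in all three frames, plus a one-frame glitch.
clip = np.full((3, 4, 4), 0.05)
clip[:, 0, 0] = 0.9   # specular highlight, present in every frame
clip[1, 2, 3] = 1.0   # hot pixel, present only in frame 1

mask = transient_bright_mask(clip, t=1)
print(np.argwhere(mask))  # only (2, 3) is flagged; the persistent highlight is not
```

A rule this simple would be fooled by a moving light or a panning shot, which is plausibly why a learned model with multi-frame input is the better tool.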

Designing a model for pixel sensitivity

Most computer vision models shrink images during preprocessing. Downsampling reduces input size and speeds up computation, but it also washes out tiny anomalies. A hot pixel in 4K can vanish completely once scaled down to 480p.
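A quick way to see the wash-out effect is a minimal sketch, assuming simple area (block) averaging as the resize filter; real pipelines may use bilinear or Lanczos filters, which differ in detail but also attenuate isolated pixels. `block_downsample` is a hypothetical helper.

```python
import numpy as np

def block_downsample(frame: np.ndarray, factor: int) -> np.ndarray:
    """Shrink a 2-D frame by averaging non-overlapping factor x factor blocks."""
    h, w = frame.shape
    # Crop so both dimensions divide evenly by the factor.
    h_crop, w_crop = h - h % factor, w - w % factor
    blocks = frame[:h_crop, :w_crop].reshape(h_crop // factor, factor, w_crop // factor, factor)
    return blocks.mean(axis=(1, 3))

# A dark UHD-sized frame (2160 x 3840) containing a single hot pixel.
frame = np.full((2160, 3840), 0.05, dtype=np.float32)
frame[1000, 2000] = 1.0

for factor in (1, 2, 4, 8):
    small = block_downsample(frame, factor)
    # Peak contrast: how far the brightest pixel stands above the background.
    contrast = float(small.max() - np.median(small))
    print(f"factor {factor}: shape {small.shape}, peak contrast {contrast:.4f}")
```

The hot pixel’s excess brightness (0.95 here) is spread over factor² output pixels, so its contrast falls by about factor²: roughly 0.95, 0.24, 0.059 and 0.015. Going from 2160 lines to 480 is a factor of 4.5, so area averaging would dilute the glitch about 20 times.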

To fix this, the team built a neural network designed to flag pixel-level artifacts in real time. The key design choices were:

Full resolution input. No downsampling. A 4K frame crushed to 480p makes pixel errors disappear before detection even starts.

Temporal context. The network looks at five consecutive frames at once, learning to distinguish between a sensor glitch (appears once) and real bright objects (like reflections or lights which persist).

Output precision. For each frame, the model generates a pixel-level error map. This isn’t a vague bounding box: it’s a continuous-valued map optimised with pixel-wise loss functions.
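The input and output side of these design choices can be sketched as follows. This is a minimal illustration under stated assumptions: the article says the network sees five consecutive frames, but the centred window, the edge-clamping policy and folding time into the channel axis are assumptions, as is the loss. The post only says “pixel-wise loss functions”; binary cross-entropy with a positive-class weight is swapped in here as a common choice when error pixels are rare. All function names and the `pos_weight` value are hypothetical.

```python
import numpy as np

def temporal_window(frames: np.ndarray, t: int, size: int = 5) -> np.ndarray:
    """Return `size` consecutive frames centred on frame t, shape (size, H, W, C).

    Near the start or end of a clip the indices are clamped, so edge frames
    are repeated (an assumed padding policy).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the window has a centre frame")
    half = size // 2
    idx = np.clip(np.arange(t - half, t + half + 1), 0, len(frames) - 1)
    return frames[idx]

def stack_as_channels(window: np.ndarray) -> np.ndarray:
    """Fold time into the channel axis: (size, H, W, C) -> (H, W, size * C)."""
    size, h, w, c = window.shape
    return window.transpose(1, 2, 0, 3).reshape(h, w, size * c)

def weighted_pixel_bce(pred: np.ndarray, target: np.ndarray,
                       pos_weight: float = 100.0, eps: float = 1e-7) -> float:
    """Binary cross-entropy averaged over every pixel of an error map.

    pred:   predicted error probabilities, shape (H, W)
    target: binary ground-truth error map, shape (H, W)
    pos_weight (hypothetical value) up-weights the rare error pixels.
    """
    p = np.clip(pred, eps, 1.0 - eps)
    per_pixel = -(pos_weight * target * np.log(p) + (1.0 - target) * np.log(1.0 - p))
    return float(per_pixel.mean())

clip = np.random.default_rng(0).random((30, 8, 8, 3))  # 30 small RGB frames
x = stack_as_channels(temporal_window(clip, t=0))
print(x.shape)  # (8, 8, 15)
```

With `pos_weight` above 1, missing a real error pixel costs more than raising one false alarm, which pushes the model toward sensitivity; the later fine-tuning rounds then work on the false alarms.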

At inference time, each frame’s dense probability map is binarised with a confidence threshold, the flagged pixels are clustered and each cluster is reduced to a centroid that pinpoints the (x, y) coordinates of a suspected pixel artifact.
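The threshold, cluster and centroid step can be sketched with plain NumPy. The sketch assumes 8-connected clustering, a 0.5 threshold and unweighted centroids; the article does not say which connectivity, threshold or weighting Netflix uses, and `error_centroids` is a hypothetical name.

```python
from collections import deque
import numpy as np

def error_centroids(prob_map: np.ndarray, threshold: float = 0.5) -> list[tuple[float, float]]:
    """Binarise a per-pixel probability map, group flagged pixels into
    8-connected clusters and return each cluster's centroid as (x, y)."""
    mask = prob_map >= threshold
    visited = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    centroids = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if visited[y0, x0]:
            continue
        # Breadth-first flood fill of one connected cluster.
        queue = deque([(y0, x0)])
        visited[y0, x0] = True
        ys, xs = [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not visited[ny, nx]:
                        visited[ny, nx] = True
                        queue.append((ny, nx))
        # Unweighted mean position of the cluster's pixels.
        centroids.append((float(np.mean(xs)), float(np.mean(ys))))
    return centroids

prob = np.zeros((6, 6))
prob[1, 1], prob[1, 2] = 0.9, 0.8   # two touching flagged pixels -> one cluster
prob[4, 4] = 0.7                    # an isolated flagged pixel
prob[3, 0] = 0.3                    # below threshold, ignored
print(error_centroids(prob))  # [(1.5, 1.0), (4.0, 4.0)]
```

Reducing each cluster to one point means a reviewer checks one coordinate per suspected defect rather than every flagged pixel.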

All of this happens in real time on a single GPU, which means the system can keep pace with modern production workflows.

Simulating errors to build better detection

A good model needs training data, but here’s the paradox: hot pixels are rare by nature. Across terabytes of footage, they occupy a tiny sliver of space and time. Manually annotating them is impractical.

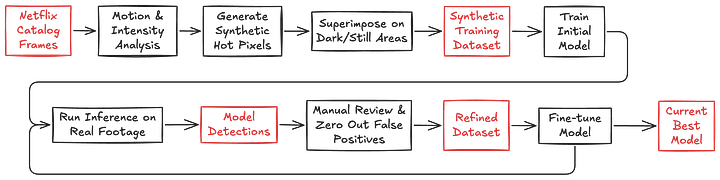

Netflix’s solution was to simulate them. The team designed a synthetic pixel error generator capable of creating realistic artifacts that mimic real-world behavior.

Two types of synthetic errors

Symmetrical errors – Many hot pixels show symmetry along at least one axis. Synthetic versions were generated with that characteristic in mind.

Curvilinear errors – Some artifacts follow faint curves or irregular lines, so the generator also reproduced those shapes.
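Here is a minimal sketch of a generator for the two error families, assuming a random texture mirrored about the vertical axis (under a symmetric falloff) for symmetrical errors, and a rasterised quadratic Bézier curve for curvilinear ones. Netflix’s actual generator is not described at this level of detail, so the shapes, sizes and function names are illustrative only.

```python
import numpy as np

def symmetric_patch(rng: np.random.Generator, size: int = 5, peak: float = 1.0) -> np.ndarray:
    """Random bright blob that is mirror-symmetric about its vertical axis."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the patch has a centre column")
    r = size // 2
    half = rng.random((size, r + 1))                            # left half plus centre column
    texture = np.concatenate([half, half[:, -2::-1]], axis=1)   # mirror left half onto right
    yy, xx = np.mgrid[-r:r + 1, -r:r + 1]
    envelope = np.exp(-(xx**2 + yy**2) / (2.0 * (r / 2.0 + 0.5) ** 2))  # symmetric falloff
    patch = texture * envelope
    return peak * patch / patch.max()

def curvilinear_patch(rng: np.random.Generator, size: int = 9,
                      peak: float = 1.0, samples: int = 50) -> np.ndarray:
    """Rasterise a random quadratic Bezier curve into a size x size patch."""
    # Control points inside the patch keep the whole curve inside it (convex hull property).
    p0, p1, p2 = rng.uniform(0, size - 1, size=(3, 2))
    t = np.linspace(0.0, 1.0, samples)[:, None]
    pts = (1 - t) ** 2 * p0 + 2 * (1 - t) * t * p1 + t ** 2 * p2
    rows, cols = np.rint(pts).astype(int).T
    patch = np.zeros((size, size))
    patch[rows, cols] = peak
    return patch

rng = np.random.default_rng(7)
blob = symmetric_patch(rng)
curve = curvilinear_patch(rng)
print(blob.shape, curve.shape)  # (5, 5) (9, 9)
```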

To train the model, Netflix superimposed these artificial hot pixels onto real frames from their content catalog. They weren’t placed uniformly at random; instead, placement probabilities came from a heatmap that weighted darker, more static areas of the scene, where real hot pixels would be most visible.
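The heatmap-weighted placement can be sketched like this. The sketch assumes that “darker” means low mean luminance, that “more static” means low per-pixel standard deviation over time, and that the two terms are multiplied; the article gives no formula, and all function names are hypothetical.

```python
import numpy as np

def placement_heatmap(clip: np.ndarray) -> np.ndarray:
    """Per-pixel placement probabilities favouring dark, static regions.

    clip: luminance frames of shape (T, H, W) with values in [0, 1].
    The darkness and stillness terms are illustrative, not a published formula.
    """
    darkness = 1.0 - clip.mean(axis=0)                 # high where the scene is dark
    motion = clip.std(axis=0)                          # high where pixels change over time
    stillness = 1.0 - motion / (motion.max() + 1e-8)   # 1 for perfectly static pixels
    weights = darkness * stillness
    total = weights.sum()
    if total <= 0:                                     # e.g. an all-white clip
        return np.full(weights.shape, 1.0 / weights.size)
    return weights / total

def sample_locations(heatmap: np.ndarray, n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw n (row, col) positions with probability proportional to the heatmap."""
    flat = rng.choice(heatmap.size, size=n, p=heatmap.ravel())
    return np.column_stack(np.unravel_index(flat, heatmap.shape))

def superimpose(frame: np.ndarray, patch: np.ndarray, row: int, col: int) -> np.ndarray:
    """Add a synthetic error patch centred at (row, col), cropped at the frame edges."""
    out = frame.copy()
    ph, pw = patch.shape
    top, left = row - ph // 2, col - pw // 2
    r0, c0 = max(top, 0), max(left, 0)
    r1, c1 = min(top + ph, frame.shape[0]), min(left + pw, frame.shape[1])
    if r0 < r1 and c0 < c1:
        region = out[r0:r1, c0:c1] + patch[r0 - top:r1 - top, c0 - left:c1 - left]
        out[r0:r1, c0:c1] = np.clip(region, 0.0, 1.0)
    return out

rng = np.random.default_rng(0)
clip = np.full((4, 6, 6), 0.05)
clip[:, :, 3:] = 0.9     # bright right half of the scene
clip[::2, 0, 0] = 1.0    # a flickering pixel in the dark half
heatmap = placement_heatmap(clip)
locations = sample_locations(heatmap, n=200, rng=rng)
frame = superimpose(clip[0], np.ones((3, 3)), *locations[0])
```

Because the patch position is known, every superimposed error comes with a free pixel-accurate label, which is what makes the synthetic dataset cheap to build.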

The result was a large synthetic dataset, diverse enough to bootstrap training without laborious manual labeling. Once the model learned to recognise fake hot pixels, Netflix moved to an iterative synthetic-to-real refinement loop.

Bridging simulation and reality

Synthetic data provided the jumpstart, but synthetic data alone wasn’t enough. To reach production-level precision, the model needed iterative refinement with real footage.

Here’s how the cycle worked:

Inference on new footage – Run the model on clips without synthetic errors.

Manual false positive review – Flagging hot pixels from scratch is painful, but reviewing model outputs and rejecting false positives is manageable.

Fine-tuning – Feed the cleaned data back into training.

Repeat – Each cycle tightens accuracy, reducing false positives while maintaining sensitivity.
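The four-step cycle above can be sketched as a loop over pluggable steps. In this minimal sketch `detect`, `review` and `fine_tune` are hypothetical stand-ins for the model, the human reviewer and the training job, and the detection record format is an assumption. Flags a reviewer confirms become positive labels; rejected flags become hard negatives.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical detection record: (clip_id, frame_index, x, y).
Detection = tuple[str, int, float, float]

@dataclass
class ReviewedLabels:
    positives: set = field(default_factory=set)   # flags a reviewer confirmed as real errors
    negatives: set = field(default_factory=set)   # flags a reviewer rejected (hard negatives)

def refine(detect: Callable[[str], list[Detection]],
           review: Callable[[Detection], bool],
           fine_tune: Callable[[ReviewedLabels], None],
           clips: list[str],
           rounds: int = 3) -> ReviewedLabels:
    """Run the inference -> review -> fine-tune cycle a fixed number of times."""
    labels = ReviewedLabels()
    for _ in range(rounds):
        for clip in clips:
            for det in detect(clip):              # 1. inference on real footage
                if det in labels.positives or det in labels.negatives:
                    continue                      # already reviewed in an earlier round
                if review(det):                   # 2. a human accepts or rejects the flag
                    labels.positives.add(det)
                else:
                    labels.negatives.add(det)
        fine_tune(labels)                         # 3. fine-tune on the cleaned labels
    return labels                                 # 4. `rounds` sets how often the cycle repeats
```

The reviewer only ever answers yes or no about a model flag, which is the point the article makes: rejecting false positives is far cheaper than searching frames from scratch.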

This synthetic-to-real pipeline gave the team a way to steadily improve without waiting years for organic annotations to accumulate. Over time, the false alarm rate fell, while recall stayed nearly perfect. The result is a model that balances high sensitivity (don’t miss errors) with manageable precision (don’t swamp humans with false alarms).
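Recall and precision here can be made concrete with a small worked example. This sketch assumes detections and ground-truth errors are (x, y) points, matched greedily one-to-one within a pixel radius; the matching rule, the radius and the convention for empty inputs are assumptions, not Netflix’s evaluation protocol.

```python
import math

def precision_recall(detections, ground_truth, radius=2.0):
    """Greedily match predicted (x, y) centroids to true error locations
    within `radius` pixels, one-to-one; return (precision, recall)."""
    unmatched = list(ground_truth)
    tp = 0
    for dx, dy in detections:
        best, best_dist = None, radius
        for i, (gx, gy) in enumerate(unmatched):
            d = math.hypot(dx - gx, dy - gy)
            if d <= best_dist:
                best, best_dist = i, d
        if best is not None:
            unmatched.pop(best)   # each true error can be matched only once
            tp += 1
    # Convention (assumed): with nothing to predict or nothing predicted, score 1.0.
    precision = tp / len(detections) if detections else 1.0
    recall = tp / len(ground_truth) if ground_truth else 1.0
    return precision, recall

truth = [(10, 10), (50, 50)]
flags = [(10.5, 10), (50, 51), (100, 100), (0, 0)]
print(precision_recall(flags, truth))  # (0.5, 1.0)
```

Here both real errors are caught (recall 1.0), but half of the four flags are false alarms (precision 0.5). Each refinement round aims to push precision up while keeping recall where it is.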

Conclusion

The Netflix team didn’t set out to build the flashiest computer vision model. The mission was practical: make pixel-hunting faster, cheaper and less painful for production teams.

By combining:

Full-resolution neural detection

Temporal context over multiple frames

Synthetic error generation

Iterative fine-tuning on real data

Netflix automated one of the most tedious parts of video QC.

The result is not only fewer hours wasted on manual review but also higher confidence that distracting pixel errors won’t slip into the final cut.

For creators, it means more time and focus for storytelling. For viewers, it means smoother immersion.

The full scoop

To learn more, check out Netflix's Engineering Blog post on this topic.

If you are already subscribed and enjoyed the article, please give it a like and/or share it with others. I really appreciate it 🙏

Keep learning

How Uber Built an AI Agent That Answers Financial Questions in Slack

Everyone’s talking about AI agents but most examples are still in prototypes or slide decks.

Here’s one running in production at Uber: Finch, a Slack-based AI agent that turns plain-language finance questions into governed real-time answers.

From finding the right dataset to writing the SQL and posting the results, it’s all handled in seconds without leaving chat.

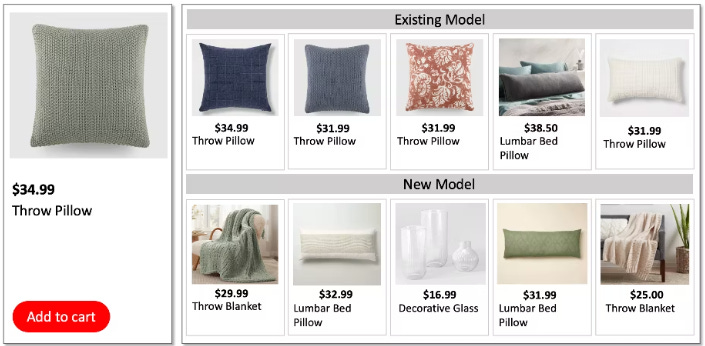

How Target Used GenAI to Lift Sales by 9% Across 100K+ Products

“You might also need …” sounds easy until you try to build it.

Target built a GenAI-powered recommender that dynamically scores 100K+ accessories based on relevance, style and aesthetics.

The result? 9% increase in sales