What the Data Crowd Was Reading in November 2025

Tools, techniques and deep dives worth reading that I came across in November 2025.

Fellow Data Tinkerers

It’s time for another round-up on all things data!

But before that, I wanted to share with you what you could unlock if you share Data Tinkerer with just 1 more person.

There are 100+ resources to learn all things data (science, engineering, analysis). They include videos, courses and projects, and can be filtered by tech stack (Python, SQL, Spark, etc.), skill level (Beginner, Intermediate and so on), provider name or free/paid. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Without further ado, let’s get to the round-up for November.

Data science & AI

Effective context engineering for AI agents (16 minute read)

Anthropic’s Applied AI team explains that context engineering is now the real bottleneck in agent design - the craft of curating a small set of high-signal tokens so agents stay focused, retrieve what they need just-in-time, and remain coherent across long, complex tasks.

Simple, Battle-Tested Algorithms Still Outperform AI (11 minute read)

Jose Crespo argues that companies are wasting hundreds of billions on overhyped AI that delivers negative ROI, while a handful of simple, century-old algorithms plus competent programmers crush it on real business problems.

How Can You Identify an Agentic AI Use Case? (13 minute read)

Pierre Petrella explains that an AI agent is an LLM with tools, a clear task and reasoning, and gives a practical framework to spot high-value agent use cases in your org by mapping inputs, outputs, tools, scope and playbooks.

Gemini 3 Prompting: Best Practices for General Usage (6 minute read)

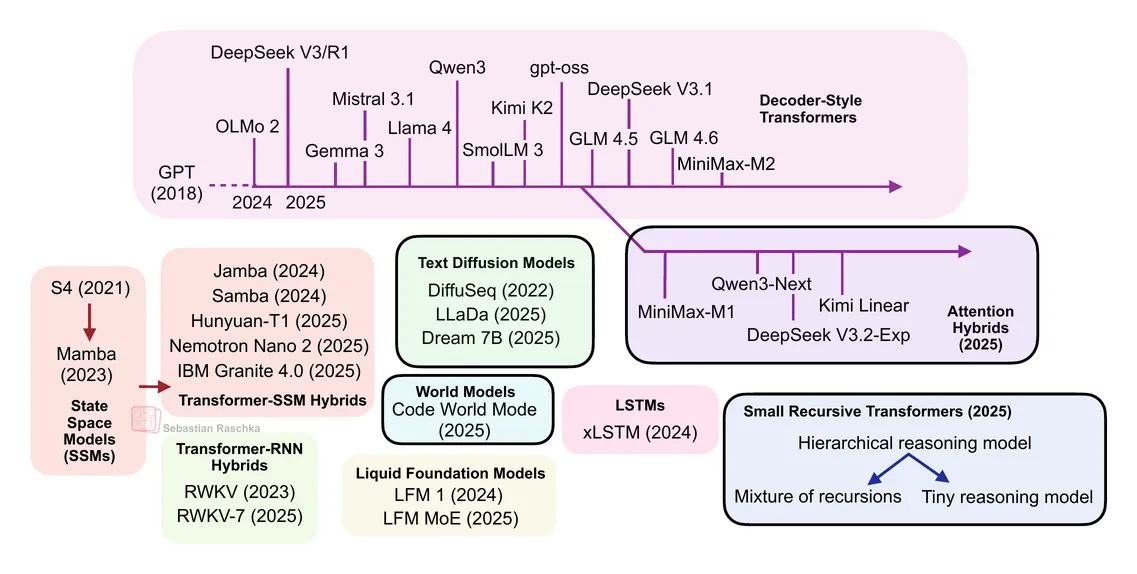

Philipp Schmid from Google DeepMind lays out practical prompting patterns for Gemini 3: be direct, highly structured (XML/Markdown), explicitly plan and self-check, and use agent-style tool and domain-specific workflows to get more reliable and high-quality outputs.

Beyond Standard LLMs (36 minute read)

Sebastian Raschka, PhD reviews four non-standard LLM directions - linear-attention hybrids, text diffusion, code world models and tiny recursive transformers - and concludes they’re promising niche upgrades but still complements, not replacements for classic autoregressive transformers.

RL is even more information inefficient than you thought (11 minute read)

Dwarkesh Patel argues that RL learns far fewer bits per sample than supervised learning, so it only becomes efficient once models are already strong.

The 1 Billion Token Challenge: Finding the Perfect Pre-training Mix (10 minute read)

Asankhaya Sharma finds that a simple 50-30-20 data mix (finePDFs, DCLM-baseline, FineWeb-Edu) lets a 1B-token GPT-2 run reach >90% of GPT-2 performance using a tenth of the data, outperforming all curriculum strategies.

A Visual Introduction to Dimensionality Reduction with Isomap (9 minute read)

Alec Helbling provides a good introduction to dimensionality reduction with visuals (and math).

Semantic Layers and the Future of Agentic Analytics (9 minute read)

Andres Vourakis says Agentic Analytics is the next big shift, powered by semantic layers and OSI standards that let AI agents finally understand business context instead of guessing.

From Data Analyst to Senior DS Manager at Skyscanner (16 minute read)

Jose Parreño Garcia talks about his rise from data analyst to Senior DS Manager at Skyscanner, what “production-ready” really means and why the real intelligence in data science lives before and after the model.

Data engineering

Is DuckLake a Step Backward? (17 minute read)

Alireza Sadeghi argues that DuckLake revives relational metadata for lakehouses to simplify operations and improve transactional safety but may struggle to scale beyond mid-sized workloads unless the ecosystem and tooling mature.

Event Streaming is Topping Out (16 minute read)

Stanislav Kozlovski argues that the real-time streaming market is saturated and shrinking, with Kafka vendors facing price wars, weak growth and looming consolidation even as Kafka itself remains entrenched.

Learnings after 4 years working with +50 companies on data engineering projects (5 minute read)

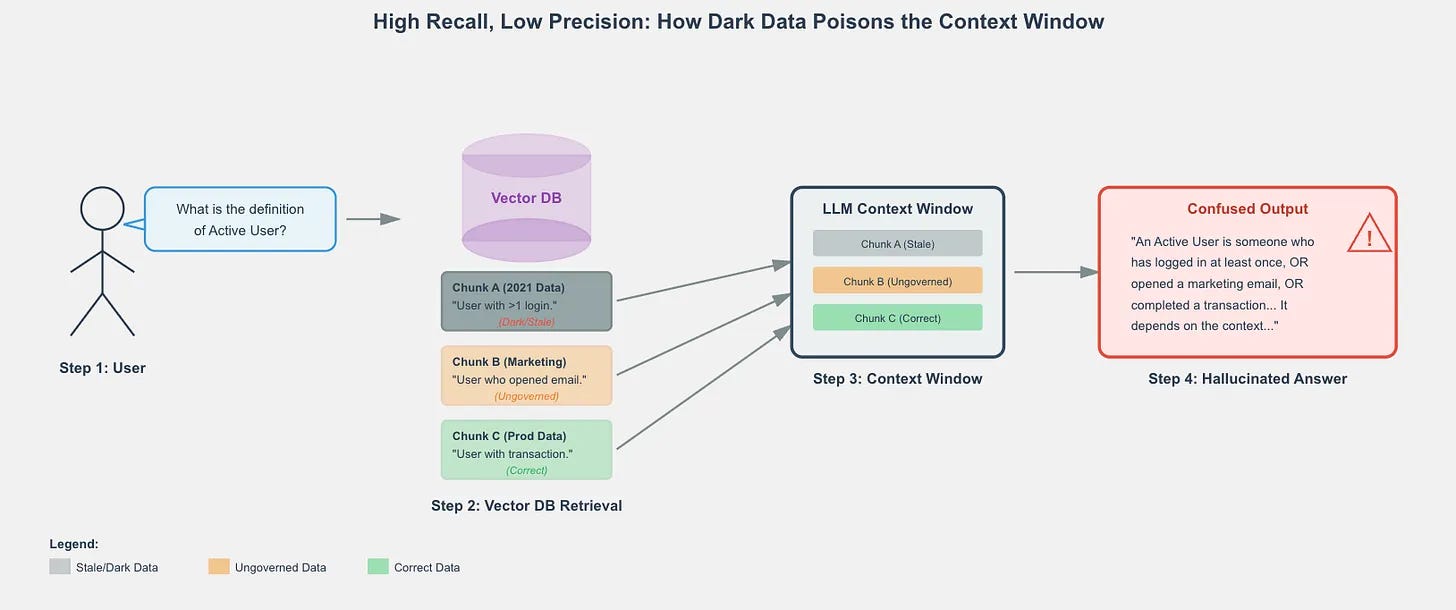

Javi Santana’s takeaway after working with 50+ companies on data: forget the hype, fix your ingestion and schemas, drop unused data and keep your stack simple if you want real speed and savings.

The Dark Data Tax: How Hoarding is Poisoning Your AI (10 minute read)

Ananth Packkildurai warns that hoarding data has become a metabolic failure, where the 90% of tables that go unused bloat costs, degrade AI signal and choke analytics.

What It Really Takes to Move From Senior to Staff Data Engineer (10 minute read)

A good discussion by SeattleDataGuy and a Staff Data Engineer at Apple about what it takes to crack the move to more senior positions.

Apache Spark Fundamentals for Data Engineers (11 minute read)

Erfan Hesami explains how Apache Spark works from the ground up, using a simple CSV example to walk through its history, architecture, ecosystem and step-by-step execution.

Iceberg CDC: Stream a Little Dream of Me (15 minute read)

Anton Borisov breaks down how Iceberg’s v3 identity tracking and v4 compact metadata turn messy equality-delete upserts into predictable, low-overhead CDC, especially when paired with a catalog that hands out clean global deltas.

Data Warehouse, Data Lake, Data Lakehouse, Data Mesh: What They Are and How They Differ (14 minute read)

luminousmen breaks down warehouse, lake, lakehouse and mesh and talks about picking the ‘right’ one depending on your biggest bottleneck.

650GB of Data (Delta Lake on S3). Polars vs DuckDB vs Daft vs Spark (9 minute read)

Daniel Beach shows that DuckDB, Polars and Daft can chew through a 650GB Delta Lake on a single 32GB node, proving most teams don’t need clusters nearly as often as they think.

Data analysis and visualisation

The Complete Guide to Dashboard Testing: Ensuring Quality and Reliability (7 minute read)

Anastasiya Kuznetsova explains how to bulletproof dashboards end-to-end by validating design, interaction, data pipelines, filters and load before they ever reach stakeholders.

The Data Analyst’s Dilemma: Accuracy vs Speed (7 minute read)

The analyst’s dilemma: knowing when to aim for perfect accuracy and when “good enough” is all the business really needs.

Other interesting reads

Eroding the Edges: (AI-Generated) Build vs. Buy and the Future of Software (12 minute read)

Interesting take by Joe Reis about “good enough” AI-coded apps blowing up the old buy vs. build calculus and pushing engineers to act more like orchestrators than builders.

The Low-Tech Revolution: Why AI Will Transform the Industries Tech Forgot (32 minute read)

Devansh makes the case that cheap models, edge hardware and agentic software flip the math for thin-margin sectors, making AI adoption inevitable even as platform consolidation threatens to capture most of the value.

Benchmark Scores = General Capability + Claudiness (8 minute read)

Greg Burnham shows that most benchmark scores collapse into one ‘general capability’ factor with a smaller ‘Claudiness’ axis on top and argues this hints we’re in a world where broad skills partly generalize but still require targeted, paid-for improvements on specific abilities.

Quick favor - need your take

Was there any standout article or topic from November I missed? Feel free to drop a comment or hit reply, even a quick line helps.

If you are already subscribed and enjoyed the article, please give it a like and/or share it with others. I really appreciate it 🙏

Thanks for reading 🙏🏼
