How Reddit Scans 1M+ Images a Day to Flag NSFW Content Using Deep Learning

A deep dive into the ML pipeline that cut moderation latency from seconds to milliseconds without blowing the budget.

Fellow Data Tinkerers!

Today we will look at how Reddit handles NSFW content at scale using deep learning

But before that, I wanted to thank those who shared Data Tinkerer with others and share an example of what you can unlock if you share Data Tinkerer with just 2 other people.

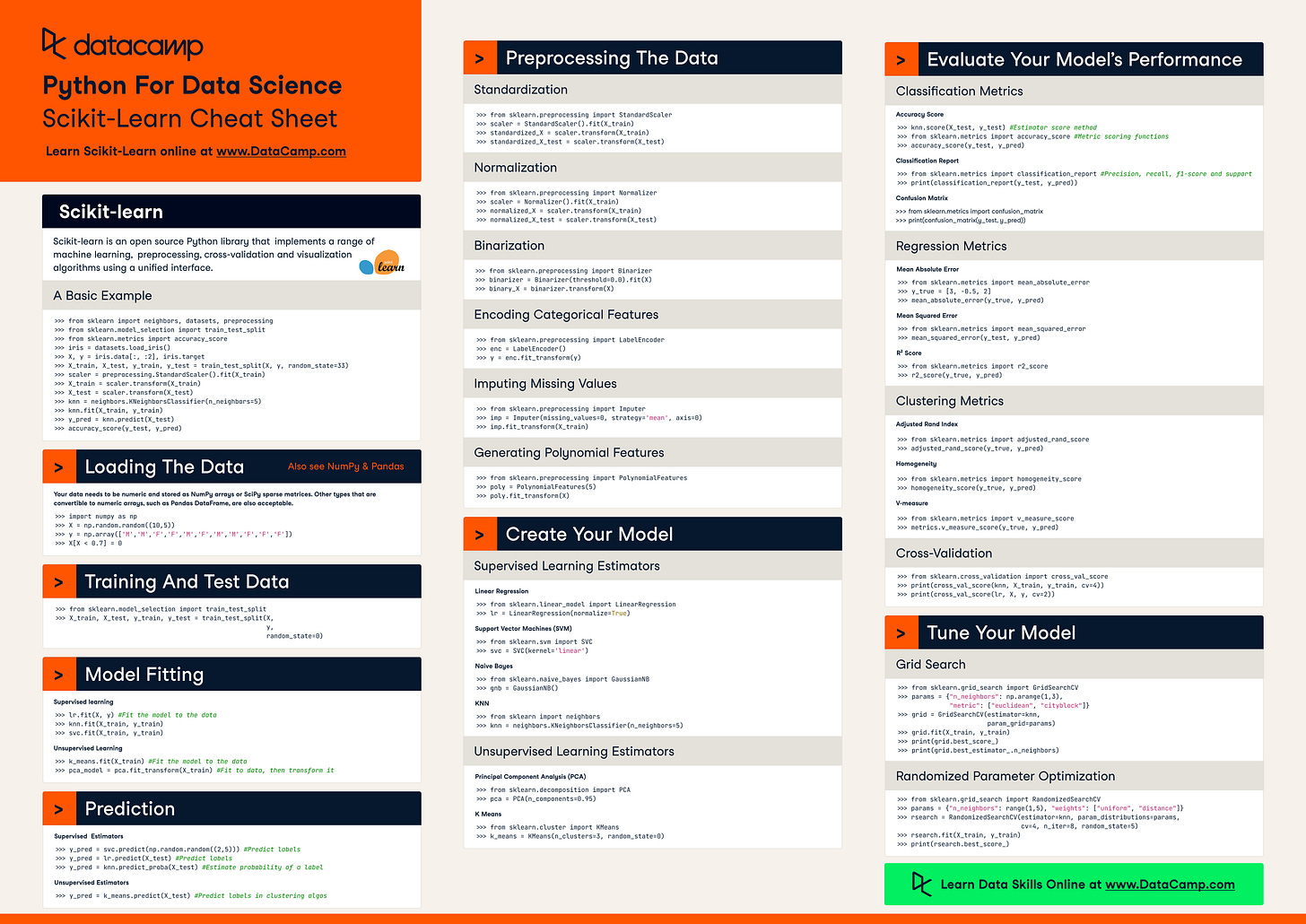

There are 100+ more cheat sheets covering everything from Python, R, SQL, Spark to Power BI, Tableau, Git and many more. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Now, with that out of the way, let’s get to Reddit’s automated content moderation