How Uber Built an AI Agent That Answers Financial Questions in Slack

Uber's Finch: the AI agent that finds the right data, runs the query and delivers secure, real-time answers where the team already works.

Fellow Data Tinkerers!

Today we will look at how Uber uses agentic AI to answer financial questions.

But first, here’s what you could unlock by sharing Data Tinkerer with just one more person.

There are 100+ resources for learning all things data (science, engineering, analysis), including videos, courses and projects. They can be filtered by tech stack (Python, SQL, Spark, etc.), skill level (Beginner, Intermediate and so on), provider name or free/paid. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Now, with that out of the way, let’s get to a real use case of agentic AI at Uber.

TL;DR

Situation

Finance at Uber needed fast answers from big, scattered datasets. Analysts bounced between Presto, IBM Planning Analytics, Oracle EPM and docs. Hand-offs to data teams created delays.

Task

Build a secure, real-time way to get governed financial numbers inside Slack, without adding to tool sprawl. Scale to many queries while keeping auditability and accuracy.

Action

Built Finch, a Slack-native conversational agent that translates plain English to SQL.

Used generative AI + RAG + self-querying agents orchestrated with LangGraph.

Curated single-table data marts and a metadata semantic layer in OpenSearch that stores column/value aliases to improve WHERE-clause precision.

Enforced RBAC and query validation, surfaced real-time status in Slack and added optional export to Google Sheets.

Ran continuous evaluation, regression tests and routing accuracy checks; optimised for speed with parallel sub-tasks and prefetching of hot metrics.

Result

Finance asks questions in Slack and gets trustworthy numbers in seconds with the SQL and source visible. Fewer tickets and less context switching, plus better adoption by non-SQL folks.

Use Cases

Conversational AI, personalisation, search relevance, in-chat collaboration

Tech Stack/Framework

LangGraph, OpenSearch, Presto, IBM Planning Analytics, Oracle EPM, Google Sheets, Slack SDK, Uber GenAI Gateway

Explained further

Context

At Uber, financial analysts and accountants sift through vast data sets to support real‑time decision making. Traditional data access, often involving complex SQL across multiple platforms, creates delays that can slow critical insights. Those slowdowns add up. Every extra hop between tools risks stale numbers, inconsistent definitions and context switching that drags focus away from actual analysis.

The Uber team built Finch, a Slack-native agent that turns plain English into governed queries and returns results in seconds. It was built to make it faster and safer to get the right number at the right time.

Constraints and goals

The goal was simple: simplify and optimise data retrieval for finance. During the build, the Uber FinTech team evaluated multiple approaches and selected a combination of GenAI, RAG and self‑querying agents. The selection criteria were practical: keep data secure, integrate cleanly with existing systems and scale as usage grows. That meant taking a hard line on role‑based access, auditable behavior and predictable latency.

By using these technologies, Finch gives finance teams real‑time, secure and accurate financial insights inside Slack. No new interface to learn, no jumping between consoles and no waiting in a queue for a teammate to write a query. People ask a question in plain English and get a traceable answer built on governed data.

What were the problems before Finch?

Financial analysts rely on data to make decisions. Historically, getting that data was slow and fragile.

Hunting for the right dataset. Analysts log into multiple platforms such as Presto, IBM Planning Analytics, Oracle EPM and Google Docs, trying to find the latest, most accurate source. That back and forth introduces delay and risk.

Wrestling with SQL complexity. If an analyst knows the schema, they can write a query. In practice, that often means cross‑referencing documentation, dealing with joins and filters and troubleshooting syntax or logic. Even experienced people spend time just getting to a correct query.

Waiting on the data queue. If the query is too complex or permissions are missing, a request goes to data specialists. Response times vary. Meanwhile, work waits.

By the time a report is ready, the window for decision making may have shrunk. The team needed a way to simplify retrieval without compromising security or accuracy.

What does Finch actually do?

Finch handles financial data retrieval inside Slack. People use natural language. Finch takes care of the rest.

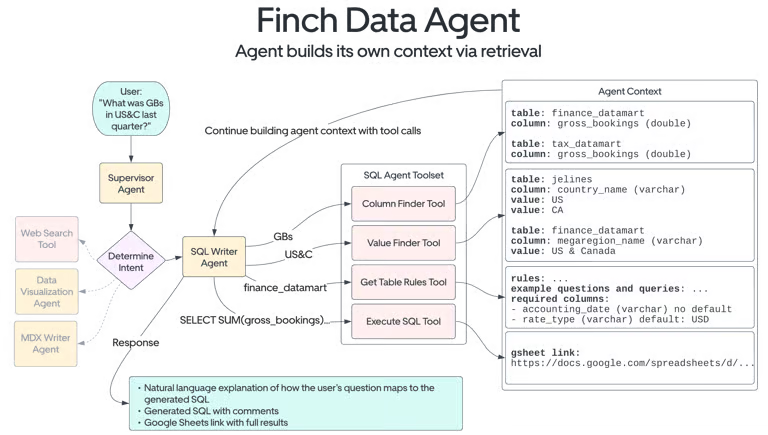

Domain-aware language mapping. Internal finance terms are mapped to structured sources. For example, “US&C” maps to the US and Canada region and “GBs” maps to gross bookings. That context avoids guesswork and keeps answers aligned with shared definitions.

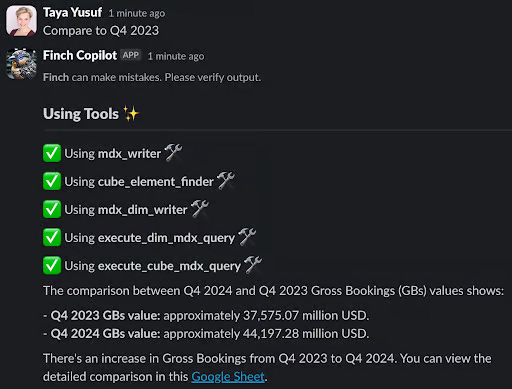

Chat-to-data in Slack. A teammate can ask, “What was GB value in US&C in Q4 2024?” Finch interprets the question and retrieves the right data.

Self‑querying agents. Finch searches metadata across accessible tables, identifies the best source for a request and generates the SQL needed to answer it.

Built-in security and permissions. Role‑based permissions ensure that only authorised users can query sensitive financial data.

Seamless large-data exports. For larger result sets, Finch can export directly to Google Sheets so analysis continues in a familiar tool.

By combining natural language understanding, metadata‑aware query generation and governed access, Finch reduces complexity and shortens the path from question to answer.
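To make the self-querying and permission ideas more concrete, here is a minimal plain-Python sketch of picking a source table for a question. It is not Uber's implementation: the `TableMeta` record, the table and role names, and the keyword-overlap scoring are all hypothetical stand-ins for Finch's metadata search and role-based access checks.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TableMeta:
    """Hypothetical metadata record for one single-table data mart."""

    name: str
    allowed_roles: set[str]
    # Natural-language terms linked to the table's columns and values.
    terms: set[str] = field(default_factory=set)


def accessible_tables(catalog: list[TableMeta], user_roles: set[str]) -> list[TableMeta]:
    """RBAC filter: keep tables that share at least one role with the user."""
    return [t for t in catalog if t.allowed_roles & user_roles]


def pick_table(question: str, catalog: list[TableMeta], user_roles: set[str]) -> TableMeta | None:
    """Return the accessible table whose terms overlap most with the question."""
    words = set(question.lower().replace("?", " ").split())
    best, best_score = None, 0
    for table in accessible_tables(catalog, user_roles):
        score = len(words & table.terms)
        if score > best_score:
            best, best_score = table, score
    return best  # None if nothing matches or nothing is accessible


catalog = [
    TableMeta("gross_bookings_mart", {"finance_analyst"}, {"gb", "gbs", "gross", "bookings", "us&c"}),
    TableMeta("payroll_mart", {"hr_admin"}, {"salary", "payroll", "gb"}),
]
chosen = pick_table("What was GB value in US&C in Q4 2024?", catalog, {"finance_analyst"})
print(chosen.name)  # gross_bookings_mart
```

Filtering by permissions before ranking means a user never gets routed to a table they cannot query. Keyword overlap is deliberately crude; in Finch, the metadata search runs against an OpenSearch index instead.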

Under the hood

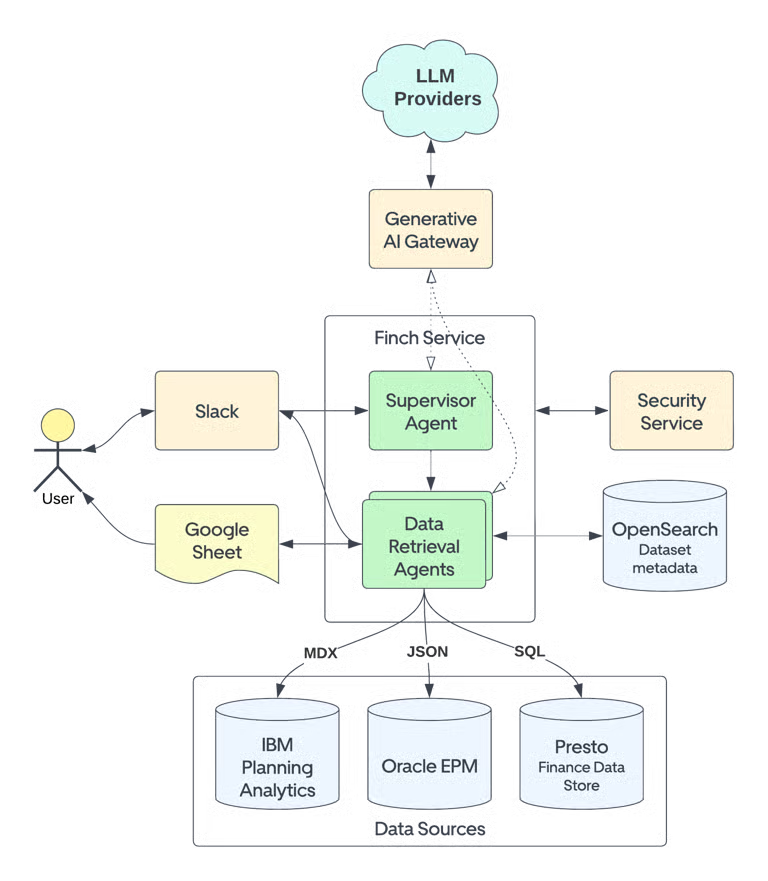

Finch is built on a modular framework that favors accuracy and clarity. It combines internal and third‑party technologies that fit Uber’s ecosystem.

What sets Finch apart is the approach to retrieval: curated, domain‑specific data marts and a semantic layer on metadata. Finch relies on a curated set of single‑table data marts that consolidate frequently accessed financial and operational metrics. The emphasis is deliberate: keep datasets simple, consistent and fast.

These datasets are optimised for simplicity. They reduce query complexity and the number of round‑trips, which makes it easier for the language model to produce a correct query and, if needed, adjust it based on feedback such as error messages. The same datasets are structured and governed with controlled access so only authorised users can query specific financial dimensions.

Finch improves the accuracy of WHERE filters by storing natural language aliases for both SQL columns and values in an OpenSearch index. Instead of relying only on schemas and a few sample rows, Finch can align user terms to the right field and the right enumeration. This closes a common gap for LLM‑powered SQL agents, where ambiguity in filter terms leads to subtle errors.
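Here is a rough sketch of how alias resolution can feed a WHERE clause. An in-memory dictionary stands in for the OpenSearch index, and Python's `difflib.get_close_matches` is swapped in as a simple fuzzy matcher; the column names, canonical values and aliases are hypothetical, not Uber's schema.

```python
from __future__ import annotations

import difflib

# Hypothetical alias index: alias -> (SQL column, canonical value).
# In Finch this metadata lives in OpenSearch; a dict stands in here.
ALIAS_INDEX = {
    "us&c": ("region", "US_AND_CANADA"),
    "us and canada": ("region", "US_AND_CANADA"),
    "emea": ("region", "EMEA"),
    "q4 2024": ("fiscal_quarter", "2024-Q4"),
    "q4 2023": ("fiscal_quarter", "2023-Q4"),
}


def resolve_alias(term: str, cutoff: float = 0.8) -> tuple[str, str] | None:
    """Map a user term to (column, value), tolerating small spelling differences."""
    key = term.strip().lower()
    if key in ALIAS_INDEX:
        return ALIAS_INDEX[key]
    matches = difflib.get_close_matches(key, ALIAS_INDEX.keys(), n=1, cutoff=cutoff)
    return ALIAS_INDEX[matches[0]] if matches else None


def build_where(terms: list[str]) -> tuple[str, list[str]]:
    """Build a parameterized WHERE clause; terms that do not resolve are skipped."""
    clauses, params = [], []
    for term in terms:
        resolved = resolve_alias(term)
        if resolved is None:
            continue
        column, value = resolved
        clauses.append(f"{column} = ?")  # qmark placeholder, value bound separately
        params.append(value)
    return ("WHERE " + " AND ".join(clauses) if clauses else ""), params


where, params = build_where(["US&C", "Q4 2024"])
print(where)   # WHERE region = ? AND fiscal_quarter = ?
print(params)  # ['US_AND_CANADA', '2024-Q4']
```

Binding resolved values as parameters rather than pasting user text into SQL keeps the filter exact and avoids injection; the alias layer is what turns "US&C" into the enumeration the table actually stores.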

Building blocks of Finch

Uber’s GenAI Gateway. Finch accesses self‑hosted and third‑party large language models through Uber’s GenAI Gateway. This makes it straightforward to swap models as capabilities improve.

LangGraph for orchestration. The team uses LangGraph to build and coordinate agents, including a Supervisor Agent and a SQL Writer Agent. Each agent focuses on a specific task and LangGraph ensures the right step happens in the right order.

OpenSearch index for metadata. Dataset metadata is stored in an index that includes SQL columns, value vocabularies and natural language aliases. This enables fuzzy matching between how people ask questions and how data is actually labeled.

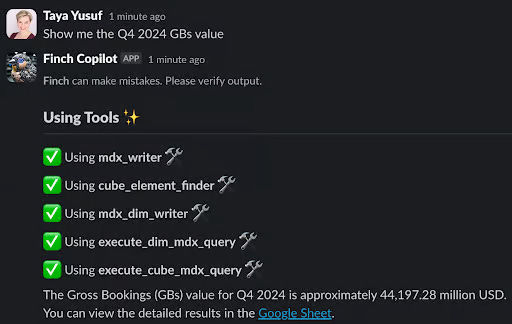

Slack integration. Finch connects to Slack through the Slack SDK. A callback handler in the LangGraph app updates Slack status messages in real time so users can see progress as the agent moves through each step. The team also uses Slack AI Assistant APIs to offer suggested starter questions, pin Finch in the Slack app and provide an always‑open split pane that keeps the conversation in view.
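The real-time status pattern can be sketched as a small callback object that posts one Slack message and then edits it in place as each step starts. This assumes a client exposing the `chat_postMessage` and `chat_update` methods of `slack_sdk.WebClient`; the `StatusCallback` class and step names are hypothetical, and a fake client is used so the example runs without Slack credentials. Finch's actual handler hooks into LangGraph's callback mechanism, which is not shown here.

```python
class StatusCallback:
    """Posts a single status message, then edits it as the agent progresses."""

    def __init__(self, client, channel: str):
        self.client = client  # e.g. slack_sdk.WebClient, or anything with the same methods
        self.channel = channel
        self.ts = None  # Slack timestamp of the status message, set on first post

    def on_step(self, step: str) -> None:
        text = f"Finch: {step}..."
        if self.ts is None:
            response = self.client.chat_postMessage(channel=self.channel, text=text)
            self.ts = response["ts"]
        else:
            self.client.chat_update(channel=self.channel, ts=self.ts, text=text)


class FakeSlackClient:
    """Stand-in for slack_sdk.WebClient that records calls instead of calling Slack."""

    def __init__(self):
        self.calls = []

    def chat_postMessage(self, channel, text):
        self.calls.append(("post", channel, text))
        return {"ts": "1700000000.000100"}

    def chat_update(self, channel, ts, text):
        self.calls.append(("update", channel, ts, text))
        return {"ok": True}


client = FakeSlackClient()
status = StatusCallback(client, channel="C123")
for step in ["Routing request", "Searching metadata", "Running SQL query"]:
    status.on_step(step)
```

Editing one message rather than posting a new one per step keeps the thread readable while still showing live progress.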

From prompt to SQL to answer

Here is the flow for a typical request.

Ask the question. A finance teammate asks Finch a question in Slack.

Route to the right agent. The Supervisor Agent receives the query and, based on its intent, routes it to the right sub‑agent, such as the SQL Writer Agent.

Pull the right metadata. Sub‑agents query the OpenSearch index for relevant metadata, including aliases for column names and values. This improves the model’s precision when building filters.

Build and run the SQL query. The SQL Writer Agent builds a query using the retrieved metadata and executes it against the appropriate source.

Show progress live. A Slack callback handler posts updates for each operation so the user can see what is happening.

Deliver the answer. Results are formatted and returned to Slack with the executed query and, when applicable, a link to a Google Sheet with the results.
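The supervisor-and-sub-agent flow above can be approximated in plain Python. This is a simplified sketch, not LangGraph's API and not Uber's code: the keyword-based `classify_intent`, the intent labels and the stub agents are hypothetical, and in Finch the routing decision is made by an LLM-backed Supervisor Agent rather than by keywords.

```python
from __future__ import annotations

from typing import Callable


def sql_writer_agent(question: str) -> dict:
    """Stub for the SQL Writer Agent: metadata lookup, SQL generation and execution."""
    return {"agent": "sql_writer", "answer": f"SQL result for: {question}"}


def document_reader_agent(question: str) -> dict:
    """Stub for a Document Reader sub-agent that answers from reference docs."""
    return {"agent": "document_reader", "answer": f"Doc excerpt for: {question}"}


SUB_AGENTS: dict[str, Callable[[str], dict]] = {
    "data_retrieval": sql_writer_agent,
    "document_lookup": document_reader_agent,
}


def classify_intent(question: str) -> str:
    """Toy keyword classifier standing in for the Supervisor Agent's LLM call."""
    doc_words = {"policy", "definition", "document", "guideline"}
    words = set(question.lower().split())
    return "document_lookup" if words & doc_words else "data_retrieval"


def supervisor(question: str, on_status: Callable[[str], None] = print) -> dict:
    """Route the question, report progress and return the sub-agent's result."""
    intent = classify_intent(question)
    on_status(f"Routing to {intent}")
    result = SUB_AGENTS[intent](question)
    on_status("Formatting answer")
    return result


result = supervisor("Show me the Q4 2024 GBs value")
print(result["agent"])  # sql_writer
```

Keeping each sub-agent behind a single function signature is what makes the pieces easy to test in isolation and to swap out, which is the same property the post credits to its LangGraph design.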

Where Finch fits

Finch is designed to fit cleanly into the broader stack. It integrates with internal data platforms such as Presto, IBM Planning Analytics and Oracle EPM. The same principles apply across these connections: consistent security, predictable performance and a single conversational surface in Slack for the finance organisation.

Finch user journey

Finch meets people where they work. Finance teams collaborate in Slack so Finch lives there too. Instead of logging into multiple systems, users ask questions in plain English.

For example, if an analyst types, “Show me the Q4 2024 GBs value”, Finch performs several steps to get the answer.

Route the request. The Supervisor Agent recognises a data retrieval request and routes it to the SQL Writer Agent.

Find the right data. The SQL Writer Agent:

Identifies the correct table by looking up words or phrases that could refer to a column or filter value.

Follows table‑specific instructions that include caveats or nuances.

Constructs the SQL needed to retrieve the values.

Validates the user’s permissions before execution.

If there is an error, rewrites the query in light of the error message.

Post the Slack response. Finch posts a structured response with a short summary and a breakdown table. For example: “The Gross Bookings (GBs) value for Q4 2024 is approximately $44,197.28M USD.”

Follow up if needed. If the analyst replies “Compare to Q4 2023”, Finch updates the query, fetches the comparable period, summarises the change and offers to export the results to Google Sheets.
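The SQL Writer's "validate permissions, execute, rewrite on error" loop might look roughly like this. It is a minimal sketch under stated assumptions: an in-memory SQLite database stands in for Presto, the permission check is a simple allow-list of table names, and `fake_rewrite` is a hypothetical stand-in for the LLM call that revises the SQL using the error message.

```python
from __future__ import annotations

import sqlite3
from typing import Callable


class PermissionDenied(Exception):
    """Raised when the user's role does not grant access to the target table."""


def run_with_retries(
    conn: sqlite3.Connection,
    sql: str,
    params: list,
    table: str,
    user_tables: set[str],
    rewrite_query: Callable[[str, str], str],
    max_attempts: int = 3,
) -> list[tuple]:
    """Check access, run the query and ask the rewriter to fix it after each error."""
    if table not in user_tables:
        raise PermissionDenied(f"user may not query {table}")
    for attempt in range(1, max_attempts + 1):
        try:
            return conn.execute(sql, params).fetchall()
        except sqlite3.Error as err:
            if attempt == max_attempts:
                raise  # give up and surface the last database error
            # Feed the error text back to the rewriter (an LLM call in Finch).
            sql = rewrite_query(sql, str(err))
    return []  # only reached when max_attempts < 1


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE gb_mart (region TEXT, fiscal_quarter TEXT, gross_bookings REAL)")
conn.executemany(
    "INSERT INTO gb_mart VALUES (?, ?, ?)",
    [("US_AND_CANADA", "2024-Q4", 100.0), ("US_AND_CANADA", "2023-Q4", 80.0)],
)


def fake_rewrite(sql: str, error: str) -> str:
    """Toy rewriter: fixes a wrong column name reported in the error message."""
    if "no such column: gb" in error:
        return sql.replace("SUM(gb)", "SUM(gross_bookings)")
    return sql


rows = run_with_retries(
    conn,
    "SELECT SUM(gb) FROM gb_mart WHERE fiscal_quarter = ?",  # first draft has a bad column
    ["2024-Q4"],
    table="gb_mart",
    user_tables={"gb_mart"},
    rewrite_query=fake_rewrite,
)
print(rows)  # [(100.0,)]
```

Capping the number of attempts matters: without it, a rewriter that never converges would loop forever instead of surfacing the error to the user.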

This pattern repeats across many finance tasks. A conversational interface reduces friction, shortens the feedback loop and keeps the person in one tool while they iterate.

Reliability at scale

Finch is designed for speed and correctness at scale. To keep response quality high, the team runs continuous evaluation and targeted optimisation.

How they test it

Check sub-agent accuracy. Each sub‑agent, such as the SQL Writer or the Document Reader, is tested against expected responses. For data retrieval, the results of the generated query are compared with those of a golden query. There are often multiple correct ways to write the SQL, so the focus is on the final answer.

Verify routing decisions. The team watches for intent collisions, where the Supervisor Agent picks the wrong tool because the question could be handled by more than one. Presto and Oracle EPM both offer data retrieval but for different use cases, which makes routing accuracy important.

End‑to‑end validation. Simulated real‑world queries exercise the full path to catch issues that only appear when systems interact.

Regression testing. Historical queries are re‑run before updates to prompts or models. This helps detect drift and protects known‑good behavior.
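Comparing answers rather than SQL text can be done by running both the generated query and the golden query and checking that they return the same rows. Below is a minimal sketch using SQLite as a stand-in for the real engines; treating results as order-insensitive multisets and rounding floats to two decimals are assumptions about what "same answer" means, not details from Uber's post. The table and data are hypothetical.

```python
from __future__ import annotations

import sqlite3
from collections import Counter


def normalise(rows: list[tuple], ndigits: int = 2) -> Counter:
    """Round floats and ignore row order so equivalent result sets compare equal."""
    return Counter(
        tuple(round(v, ndigits) if isinstance(v, float) else v for v in row)
        for row in rows
    )


def same_answer(conn: sqlite3.Connection, generated_sql: str, golden_sql: str) -> bool:
    """True when the generated query returns the same rows as the golden query."""
    generated = conn.execute(generated_sql).fetchall()
    golden = conn.execute(golden_sql).fetchall()
    return normalise(generated) == normalise(golden)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE gb_mart (region TEXT, fiscal_quarter TEXT, gross_bookings REAL)")
conn.executemany(
    "INSERT INTO gb_mart VALUES (?, ?, ?)",
    [("US_AND_CANADA", "2024-Q4", 60.5), ("US_AND_CANADA", "2024-Q4", 39.5), ("EMEA", "2024-Q4", 50.0)],
)

golden = (
    "SELECT SUM(gross_bookings) FROM gb_mart "
    "WHERE region = 'US_AND_CANADA' AND fiscal_quarter = '2024-Q4'"
)
# Written differently, same answer: filters swapped and aggregated over a subquery.
generated = (
    "SELECT SUM(gb) FROM (SELECT gross_bookings AS gb FROM gb_mart "
    "WHERE fiscal_quarter = '2024-Q4' AND region = 'US_AND_CANADA')"
)
print(same_answer(conn, generated, golden))  # True
```

The same check doubles as a regression test: store each historical question with its golden query, then re-run the suite whenever prompts or models change.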

The iteration loop is the point. Finch should return correct financial insights consistently even as the underlying models and prompts evolve.

How they make it fast

Finch minimises database load by optimising SQL, executes sub‑tasks in parallel where appropriate and prefetches frequently accessed metrics. Those choices reduce latency and help the system respond under higher demand without sacrificing clarity or control.
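The two latency techniques named here, parallel sub-tasks and prefetching of frequently accessed metrics, can be illustrated with the standard library. In this sketch `fetch_metric` is a hypothetical stand-in for a database query (it only sleeps and returns a fake value), `concurrent.futures.ThreadPoolExecutor` runs independent lookups concurrently, and a plain dictionary acts as the prefetch cache; which metrics count as "hot" is an assumption.

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor

QUERY_DELAY_S = 0.2  # simulated database latency per query


def fetch_metric(name: str, quarter: str) -> float:
    """Hypothetical stand-in for a SQL query against a data mart."""
    time.sleep(QUERY_DELAY_S)
    return float(len(name) * 10 + int(quarter[-1]))  # fake but deterministic value


class MetricService:
    def __init__(self, hot_metrics: list[tuple[str, str]]):
        self.cache: dict[tuple[str, str], float] = {}
        self.hot_metrics = hot_metrics

    def prefetch(self) -> None:
        """Warm the cache with frequently requested metrics, e.g. on a schedule."""
        self.cache.update(self.fetch_many(self.hot_metrics))

    def fetch_many(self, keys: list[tuple[str, str]]) -> dict[tuple[str, str], float]:
        """Serve cached keys directly and fetch the rest in parallel."""
        found = {k: self.cache[k] for k in keys if k in self.cache}
        missing = [k for k in keys if k not in self.cache]
        with ThreadPoolExecutor(max_workers=8) as pool:
            values = pool.map(lambda k: fetch_metric(*k), missing)
            found.update(zip(missing, values))
        return found


service = MetricService(hot_metrics=[("gross_bookings", "2024-Q4"), ("trips", "2024-Q4")])
service.prefetch()  # the two queries run concurrently, so this takes about one delay

start = time.perf_counter()
answer = service.fetch_many([("gross_bookings", "2024-Q4"), ("trips", "2024-Q4")])
elapsed = time.perf_counter() - start  # near zero: both values come from the cache
```

Threads suit this case because the work is I/O-bound waiting on the database; four uncached lookups finish in roughly one query's latency instead of four.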

Finch continues to improve on both speed and accuracy, so finance gets real‑time answers with minimal waiting and reliable behavior.

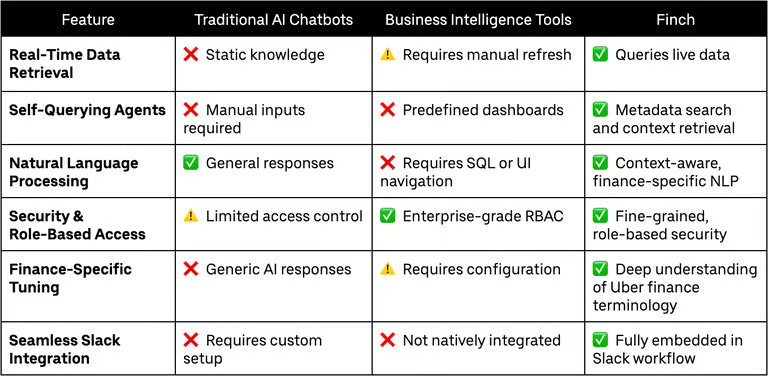

How is Finch different from other chatbots?

Finch is built for Uber’s finance teams. It provides real‑time, AI‑powered insights with security, automation and scale. Unlike a generic chatbot or a traditional BI tool, Finch combines structured retrieval, self‑querying behavior and enterprise access controls.

Query live data. Finch retrieves up‑to‑date financial data. A static chatbot relies on pre‑trained knowledge.

Plan queries automatically. Traditional BI often requires manual SQL or preset dashboards. Finch determines the query plan on the fly.

Work where the teams already are. Finch lives in Slack on desktop and mobile. That keeps analysis in the same place where conversations happen, which is useful for teammates who do not write SQL.

Protect sensitive information. Enterprise authentication, granular permissions and query validation keep sensitive data protected.

Wrapping it up

Finch changes how finance teams at Uber interact with data. It makes insights more accessible, secure and real time. By combining GenAI, self‑querying agents and enterprise‑grade security, Finch removes the usual delays of traditional retrieval so people can focus on decisions rather than queries.

With Finch, decision-making moves forward more quickly, confidently and with clarity.

Lessons learned

Meet people where they already work: Putting the agent in Slack cut the alt-tab Olympics. Adoption isn’t a training problem when the interface is the chat window everyone lives in.

Treat metadata as product, not paperwork: Storing column and value aliases in OpenSearch turned vague human language into precise filters. WHERE clauses stopped being landmines.

Orchestrate agents like a team, not a monolith: A Supervisor routes intent. A SQL Writer does the heavy lifting. Smaller, specialised agents are easier to test, debug and upgrade.

Make the answer portable: One-click export to Google Sheets kept downstream analysis in the team’s flow. The best result is the one users can immediately work with.

The full scoop

To learn more, check out Uber's Engineering Blog post on this topic.

If you are already subscribed and enjoyed the article, please give it a like and/or share it with others. I really appreciate it 🙏

Keep learning

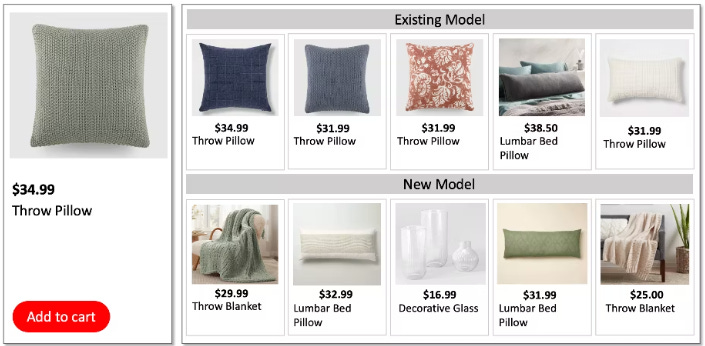

How Target Used GenAI to Lift Sales by 9% Across 100K+ Products

“You might also need …” sounds easy until you try to build it.

Target built a GenAI-powered recommender that dynamically scores 100K+ accessories based on relevance, style and aesthetics.

The result? A 9% increase in sales.

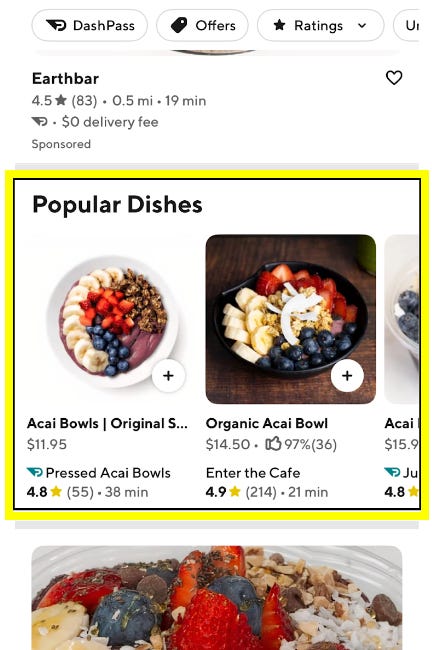

How DoorDash Used LLMs to Trigger 30% More Relevant Results

How do you handle search queries like “low-carb spicy chicken wrap with gluten-free tortilla” at scale?

DoorDash rebuilt its search pipeline to better understand both user intent and product metadata. The result? A 30% increase in relevant results and measurable gains across key engagement metrics.

This post breaks down the hybrid approach they used: combining LLMs, structured taxonomies and real-time retrieval without sacrificing speed or accuracy.

This is a great, detailed article.