What the Data Crowd Was Reading in October 2025

Tools, techniques and deep dives worth reading that I came across in October 2025.

Fellow Data Tinkerers

It’s time for another round-up on all things data!

But before that, I wanted to share with you what you could unlock if you share Data Tinkerer with just 1 more person.

There are 100+ resources to learn all things data (science, engineering, analysis). They include videos, courses and projects, and can be filtered by tech stack (Python, SQL, Spark, etc.), skill level (Beginner, Intermediate and so on), provider name or free/paid. So if you know other people who like staying up to date on all things data, please share Data Tinkerer with them!

Without further ado, let’s get to the round-up for October.

Data science & AI

How does gradient descent work? (24 minute read)

Alex Damian and Jeremy Cohen show that gradient descent stays stable by self-regulating sharpness through oscillations, formalized by a new ‘central flow’ that explains why deep learning works at the edge of stability.

The Model Selection Showdown: 6 Considerations for Choosing the Best Model (7 minute read)

This article covers six practical steps for model selection that work on messy real-world data.

Recursive Language Models (26 minute read)

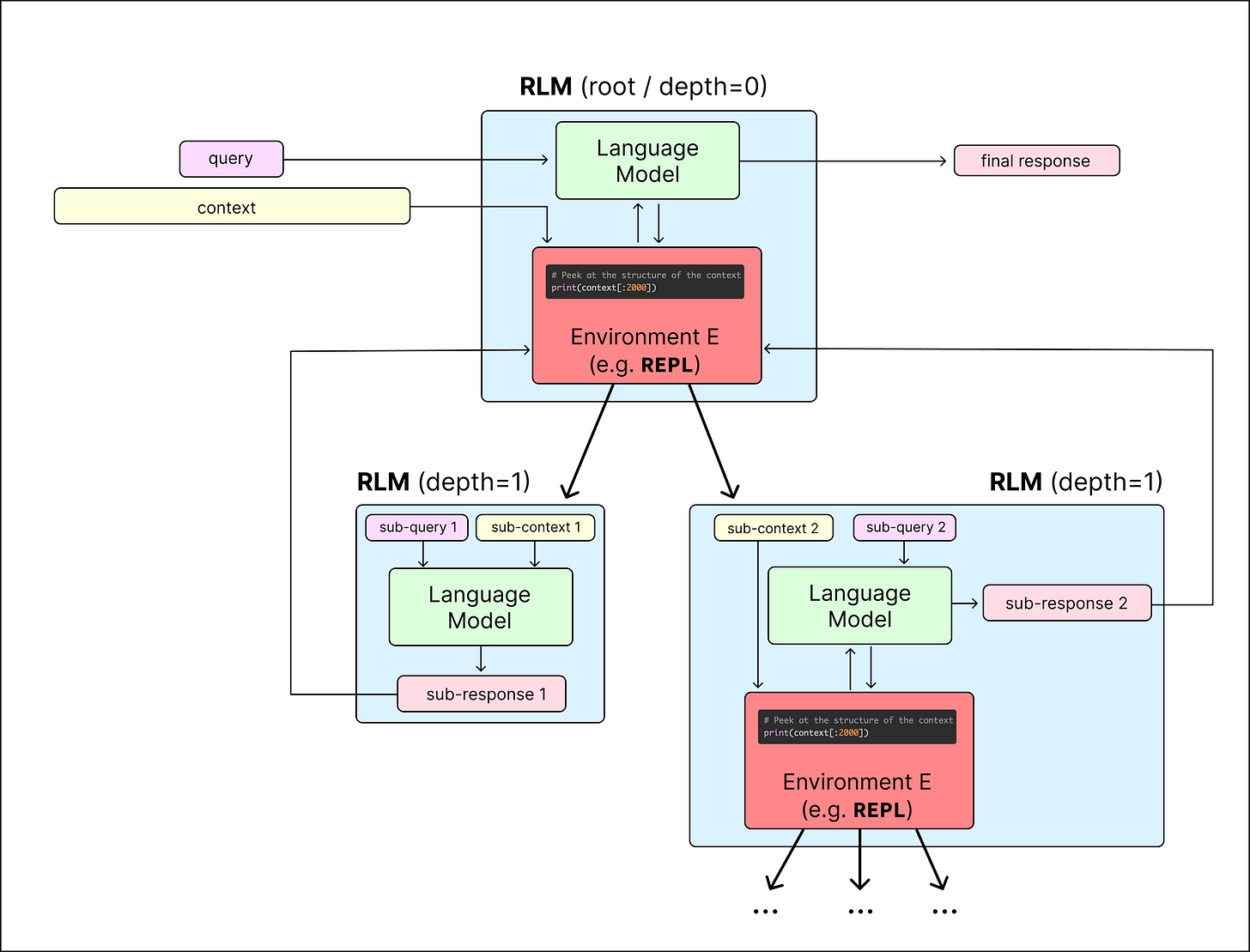

Alex Zhang and Omar Khattab introduce Recursive Language Models, where LLMs recursively call themselves through a REPL to handle unbounded context, outperforming GPT-5 on long-context tasks while cutting cost and context rot.

Are Foundation Models Ready for Your Production Tabular Data? (14 minute read)

This post dives into how tabular foundation models work, how to use them and why they shine on small/medium tables but still trail boosted trees at true production scale.

Why analytics agents break differently (8 minute read)

Ravit Shrivastav explains how Hex’s Notebook Agent tackles analytics-specific context challenges using token budgets, explicit truncation and graph-aware design to make AI reason effectively over data.

The Continual Learning Problem (11 minute read)

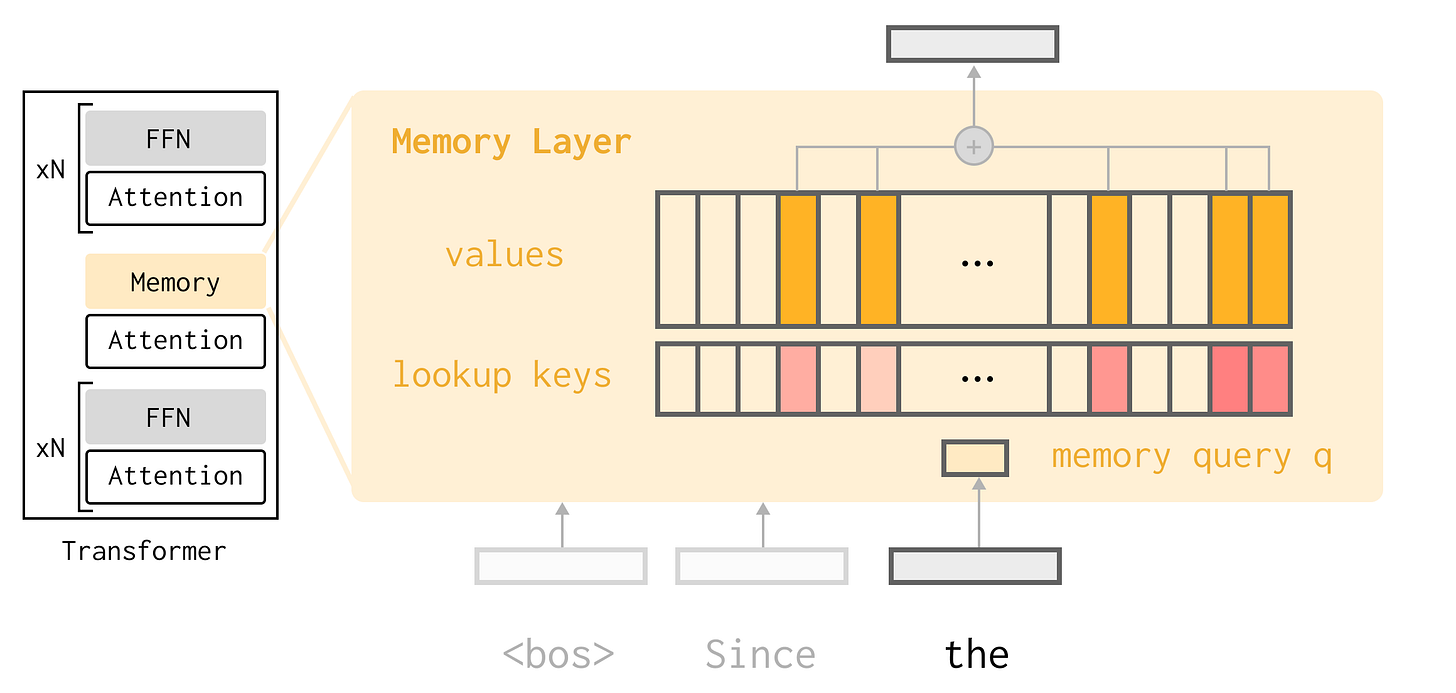

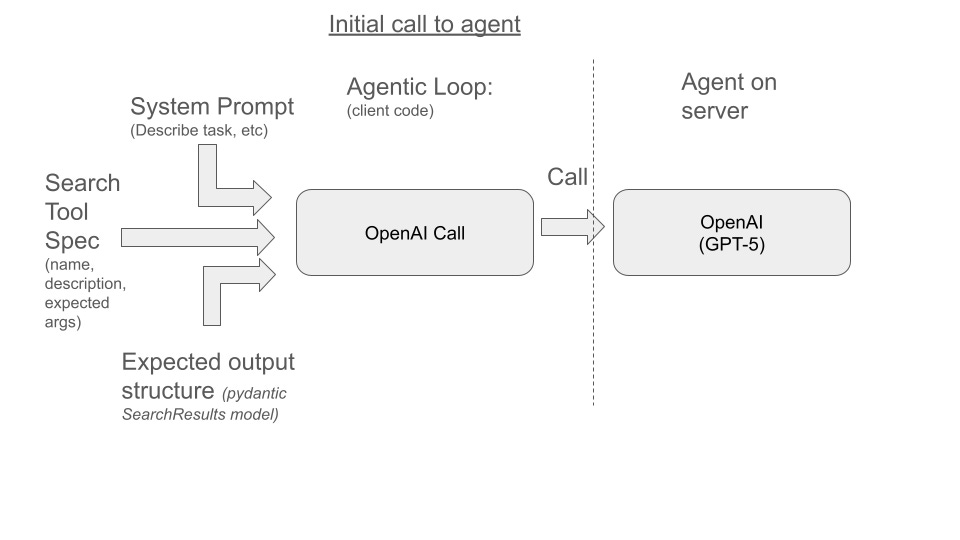

Jessy Lin argues that sparse memory finetuning lets models continually learn new facts with minimal forgetting, outperforming LoRA and full finetuning by a wide margin.

Designing agentic loops (8 minute read)

Simon Willison explains that mastering coding agents like Claude Code means learning to design ‘agentic loops’ where AI can iteratively run, test and refine code toward a clear goal.

Reasoning boosts search relevance 15-30% (10 minute read)

Doug Turnbull shows that reasoning-driven agents can boost simple BM25 search relevance by 15–30%, proving that agentic loops with lightweight, transparent search tools outperform traditional complex retrieval systems.

LLMs are getting better at character-level text manipulation (7 minute read)

Tomáš Burkert finds that GPT-5-era models can now handle precise character-level tasks and decode ciphers, suggesting they’ve learned real text mechanics rather than just token tricks.

Do AIs think differently in different languages? (12 minute read)

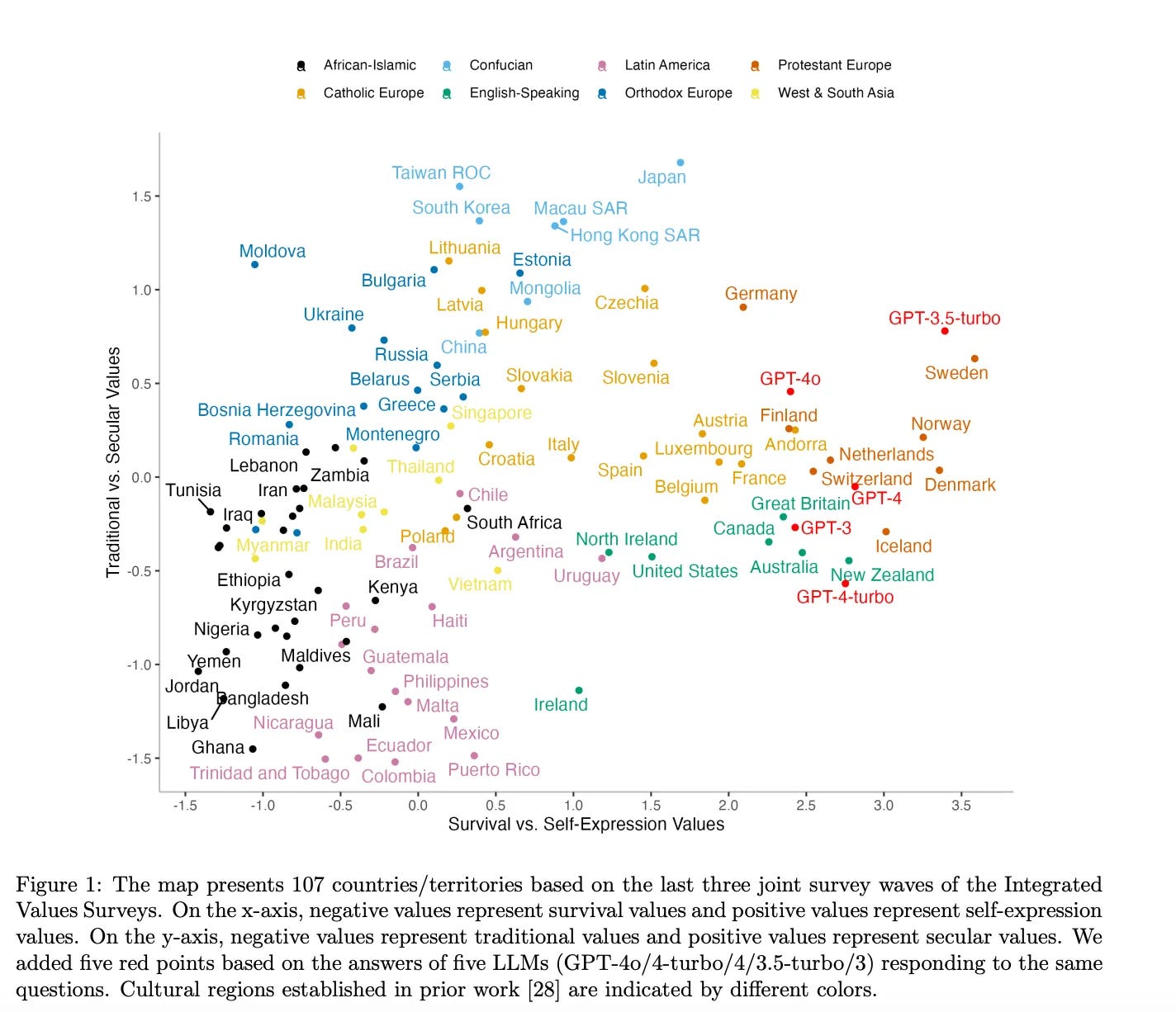

Kelsey Piper finds that AI models think mostly in English and express consistent liberal values across languages, showing language barely changes their worldview.

Data engineering

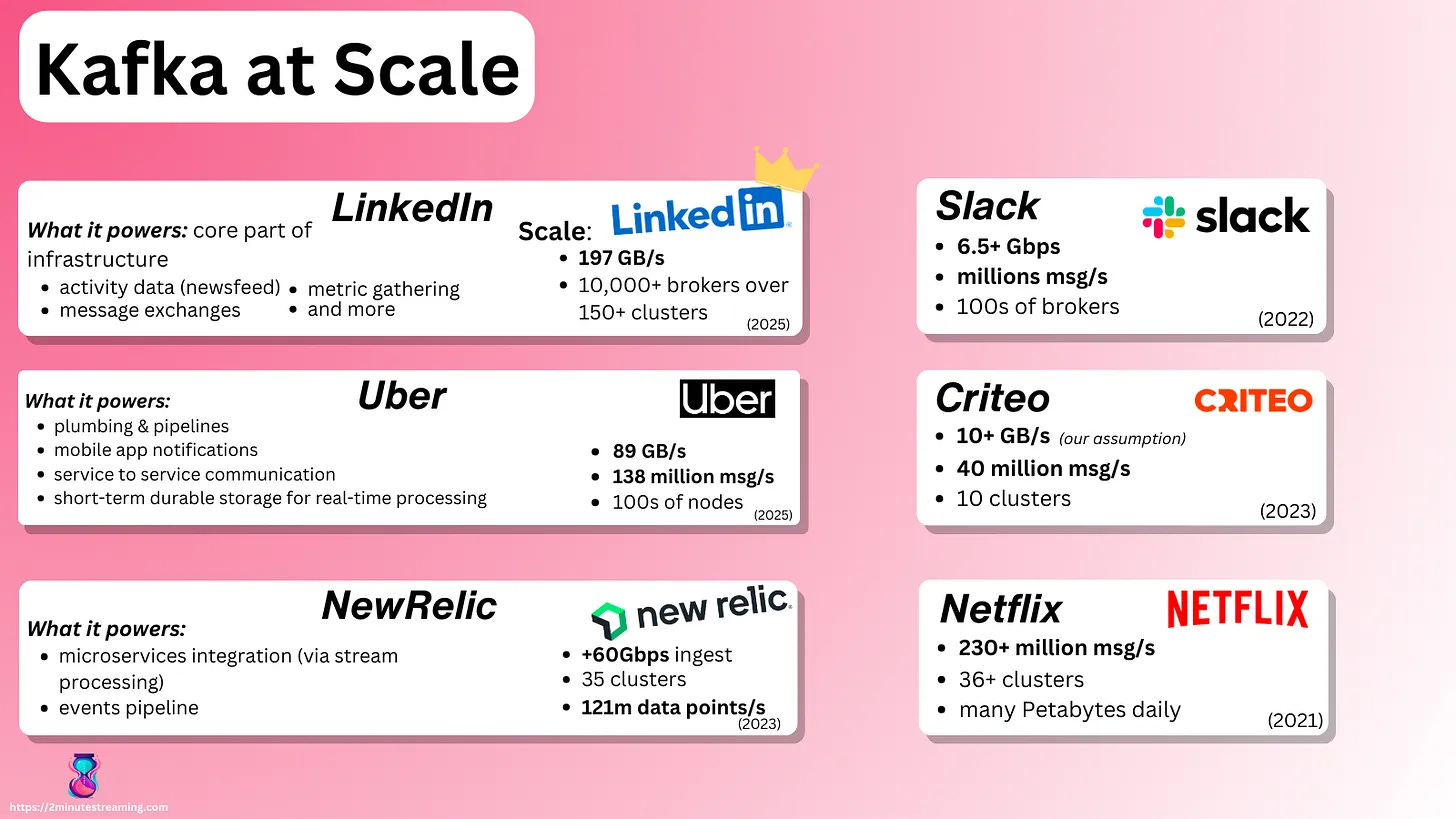

How Kafka Works (33 minute read)

Stanislav Kozlovski and Neo Kim give a deep yet practical walkthrough of Kafka’s internals, showing how it powers durable, scalable and real-time data systems.

Switching me Softly (17 minute read)

Anton Borisov shows how Fresha pulled off zero-downtime Postgres 12 to 17 upgrades that scaled to 20+ prod DBs.

Practical Guide to Semantic Layers: From Definition to Demo (5 minute read)

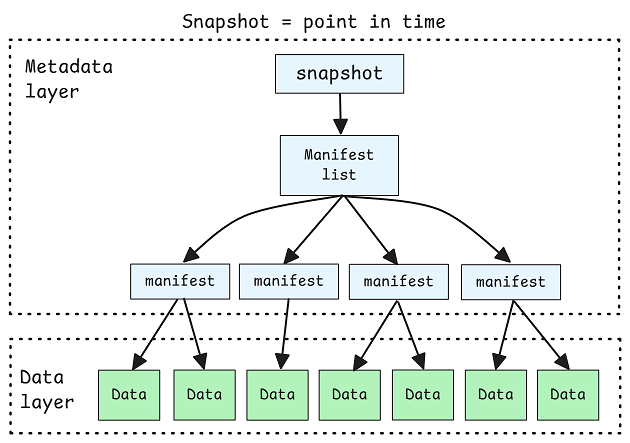

Rasmus Engelbrecht shows how to unify metrics with a semantic layer demo using Boring Semantic Layer, DuckDB and Streamlit to turn YAML-defined logic into consistent, auto-generated SQL.

Beyond Indexes: How Open Table Formats Optimize Query Performance (26 minute read)

Jack Vanlightly explains why open table formats like Iceberg and Delta rely on layout, pruning and metadata to optimize analytical query performance.

Thinking Like a Data Engineer (9 minute read)

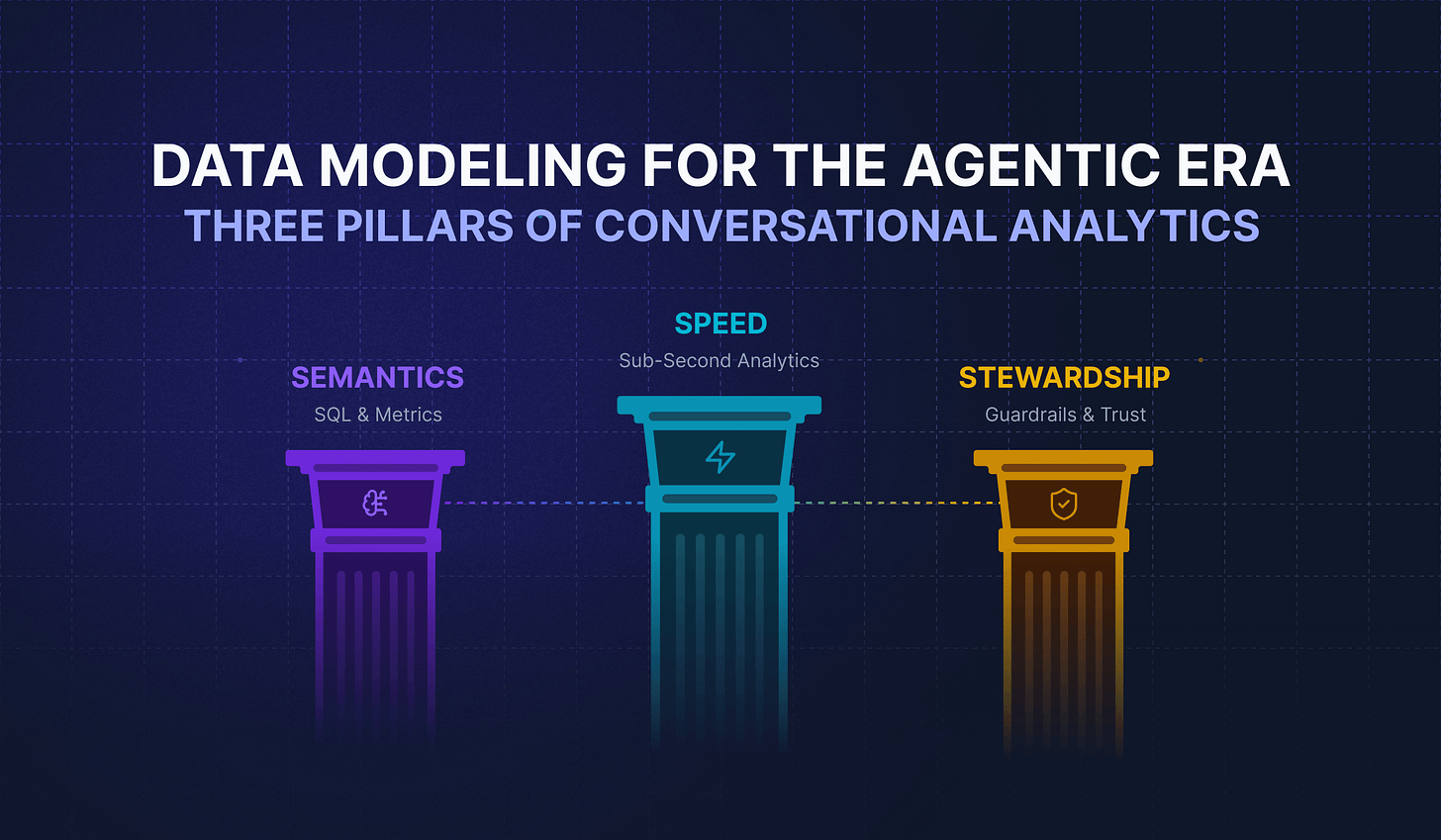

Ananth Packkildurai shares lessons from mentors that shaped his mindset, showing that true data engineering is about curiosity, observation and confidence, not just code.

Data Modeling for the Agentic Era: Semantics, Speed, and Stewardship (28 minute read)

Simon Späti shows how semantics, speed and stewardship form the foundation of agentic data modeling, using Metrics SQL and Rill to build fast, trustworthy, human-guided analytics workflows.

Why You’ll Never Have a FAANG Data Infrastructure and That’s the Point (12 minute read)

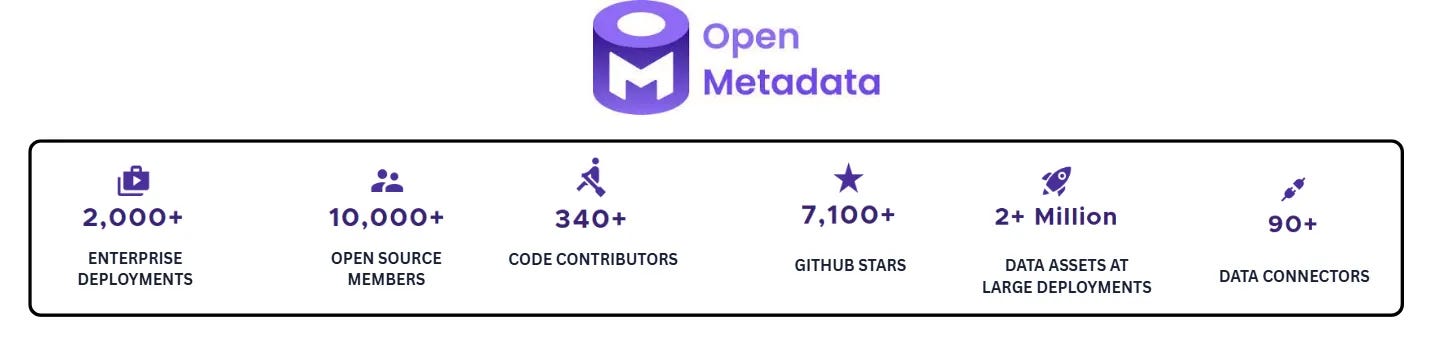

Travis Thompson explains why companies shouldn’t replicate FAANG data stacks but instead adopt their design principles to achieve similar outcomes with less cost and complexity.

Getting Started with OpenMetadata: An Open-Source Data Catalogue Solution (8 minute read)

Erfan Hesami shares how OpenMetadata brings order to messy data ecosystems by unifying discovery, governance and collaboration in one open-source platform for modern data teams.

From Marketing to Data Engineering: How I Made the Switch (8 minute read)

Alejandro Aboy talks about his path from marketing to data engineering, why his teammates call him an octopus and his take that “big data” is a myth for most teams.

Data analysis and visualisation

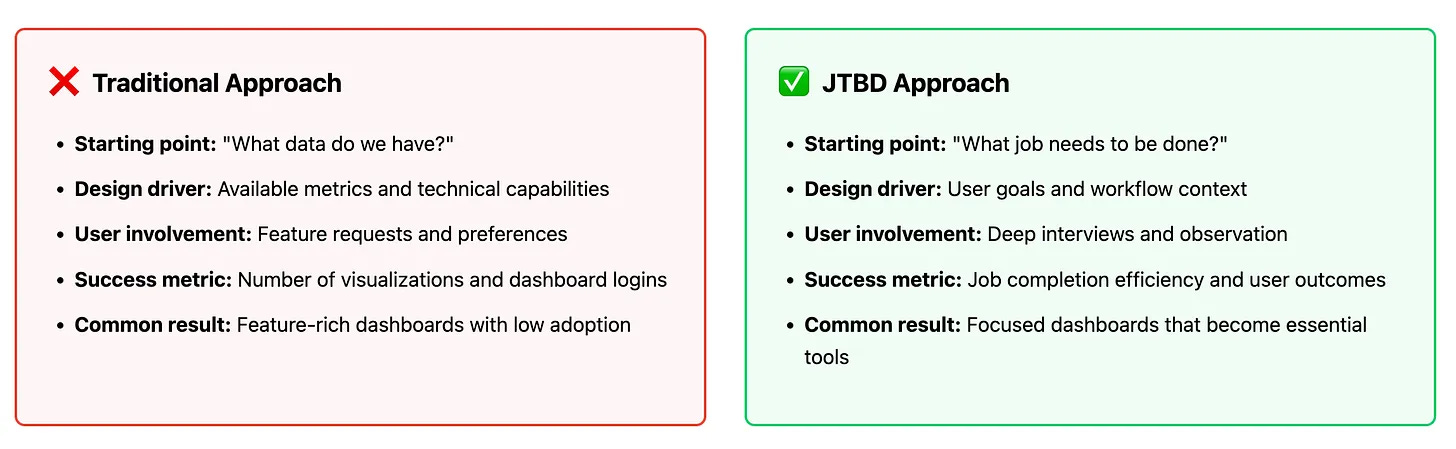

Jobs-to-be-Done: A User-Centered Approach to Dashboard Design (8 minute read)

Anastasiya Kuznetsova argues dashboards should be built for what users are trying to achieve and not just what they can see because data is only useful when it helps people get their job done.

From Dental Cleaning to Data Cleaning: How I Pivoted to Healthcare Analytics (9 minute read)

Thais Cooke talks about her unplanned pivot into data, what healthcare data analysis looks like and how she thought she was being scammed by a LinkedIn ‘Impostor’.

Other interesting reads

Real AI Agents and Real Work (8 minute read)

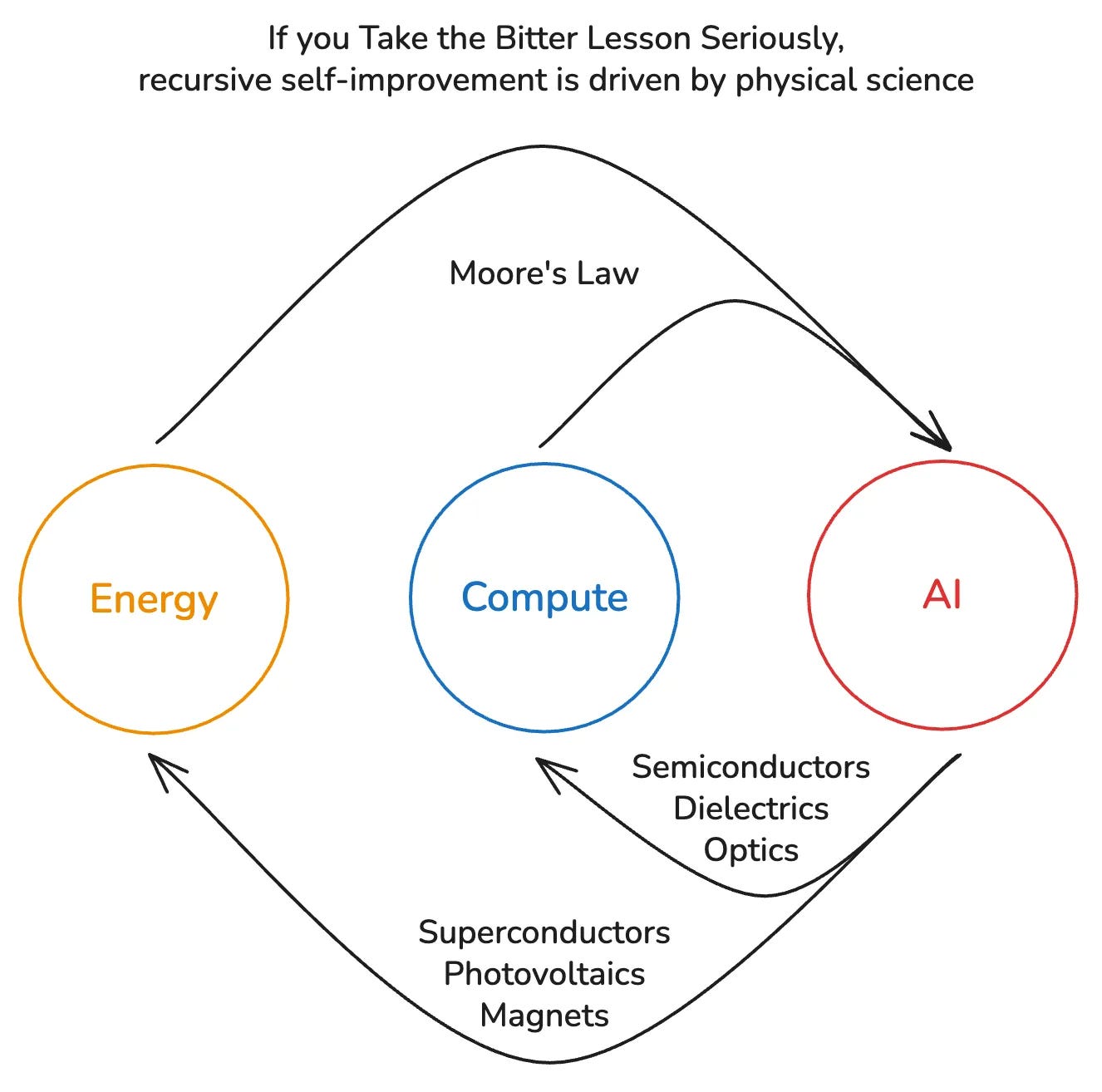

Ethan Mollick explains how AI agents can now perform real, valuable work but warns that their impact depends on human judgment, not just automation.

Taking the Bitter Lesson Seriously (4 minute read)

Rohan Pandey argues that advancing AI requires letting it improve compute and energy through autonomous scientific discovery, not just scaling algorithms.

OpenAI is a consumer company (8 minute read)

Vikram Sreekanti and Joseph E. Gonzalez argue that OpenAI’s DevDay showed a clear shift from developer tools to consumer products, positioning ChatGPT as a consumer platform rather than a developer-first company.

Import AI 431: Technological Optimism and Appropriate Fear (24 minute read)

Jack Clark argues that AI is advancing fast enough to warrant both awe and fear and society must respond with transparency, public dialogue and readiness for inevitable automation.

Quick favor - need your take

Was there any standout article or topic from October I missed? Feel free to drop a comment or hit reply, even a quick line helps.

If you are already subscribed and enjoyed the article, please give it a like and/or share it with others. I really appreciate it 🙏

As always thank you so much for the shout out.💐🙏